ENS’s true power lies in the Resolver. The Resolver is essentially an adapter for a name and can act as an Account Abstraction hook; it is extremely capable. But it hadn’t been used much until very recently, except by our team and by zkMoney for stealth payments. Gas was always the biggest obstacle to ENS adoption: not onboarding, not wrapped subdomains, etc. Wrapped subdomains haven’t taken off simply because the gas fees to wrap them outweigh the upside. Why would a large org force its users to pay crazy gas to use a name when it can just as easily use CCIP-Read and run off-chain registries? CB.ID did just that. More will follow. Off-chain records are here to stay; it’s only a matter of who implements them efficiently first. CCIP2 will take gas out of the equation while ironing out the kinks in the off-chain record management infrastructure. Records will now be free and smoothly accessible.

Sounds very cool! Thanks for all the links, need to dive deeper (again hah). Don’t know much about Nostr, heard a lot about it tho! I need to start following you, and need to look into what @neiman is doing too, he’s always up to cool stuff I think. Read up on IPNS. Gasless records sound like heaven.

Sorry for my ignorance lol, but practically, users would need to update their resolver (a transaction) and then they would be able to sign messages updating the contenthash (free)? And where would users set these records? Or would dapps integrate this?

And @isX, this is all very cool. Never really thought about it like that. What @jefflau.eth said also kinda blew my mind haha. Probably a lot of thought went into cb.id too! Hope more follow!

So you both think basically all on-chain text record setting won’t be necessary? And it will all move to off-chain registries, via contenthash? And there are no downsides to that? Certain records (like socials) still should be more ‘visible’ than others. I imagine it would be possible to separate/indicate such preferences?

Are there any risks of losing these off-chain records? And if it somehow all failed, could people just set up a new resolver? For a showcase this doesn’t really matter I guess, but it sometimes might. (Bit unsure about this part, as I would first assume on-chain is better/more secure, but that might be overkill I guess.)

I would go crazy setting records without gas fees, can tell you that for sure haha. And obviously the variety of records people set and experiment with would go UP too.

Are there any timelines on this? I know CCIP was ready(?)

And to tie it all back to the showcase/favorites record. Is this the way to go? Would it still be as easy as resolving a name for any app to integrate the showcase?

Correct, you can already take the demo client for a spin on Goerli: https://ccip2.eth.limo

- Users will have to pay a one-time gas fee to migrate from their current Public Resolver to CCIP2 and to set their global contenthash. All records from then on will be stored under the global contenthash they just set. These records are free and infinitely updatable.

Whenever a user sets new records (on https://ccip2.eth.limo), their signature is attached to the records. Upon each resolution, the attached signature is verified in real time against the name’s current owner/manager. This prevents bad resolutions.
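A rough sketch of that signed-record check, assuming a hypothetical in-memory map (`currentOwner`) stands in for the on-chain owner/manager lookup, and using Ed25519 from Node's `crypto` in place of the secp256k1/EIP-191 signatures an Ethereum wallet would actually produce. The record is signed once when it is set; every resolution re-verifies it against whoever owns the name now:

```javascript
const crypto = require("crypto");

// Hypothetical stand-in for the on-chain owner/manager lookup: name -> public key.
const currentOwner = new Map();

// Canonical bytes that get signed for one record.
function payloadOf(name, key, value) {
  return Buffer.from(JSON.stringify({ name, key, value }));
}

// Set time: the owner signs the record off-chain (no gas).
function signRecord(privateKey, name, key, value) {
  const signature = crypto.sign(null, payloadOf(name, key, value), privateKey);
  return { name, key, value, signature };
}

// Resolution time: verify against the *current* owner; otherwise resolve nothing.
function resolveRecord(record) {
  const ownerKey = currentOwner.get(record.name);
  if (!ownerKey) return null;
  const payload = payloadOf(record.name, record.key, record.value);
  const valid = crypto.verify(null, payload, ownerKey, record.signature);
  return valid ? record.value : null;
}

const alice = crypto.generateKeyPairSync("ed25519");
const bob = crypto.generateKeyPairSync("ed25519");

currentOwner.set("broke.eth", alice.publicKey);
const record = signRecord(alice.privateKey, "broke.eth", "url", "https://example.com");
const beforeTransfer = resolveRecord(record); // "https://example.com"

currentOwner.set("broke.eth", bob.publicKey); // the name changes ownership
const afterTransfer = resolveRecord(record);  // null: the old records stop resolving
```

Because the check runs against the current owner at resolution time, a transfer invalidates the previous owner's records without any cleanup transaction.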

These records can be set on our client https://ccip2.eth.limo. The ENS App doesn’t support viewing or editing off-chain records.

No need for anyone to integrate anything. Our stack is based on ENSIP-10 and ENSIP-10 is already integrated.

You can do anything you want. World is your oyster and there are no gas fees. Resolvers are highly programmable. No downsides other than the records being off-chain, which is not really a downside but an aspect that can be optimised. An expensive on-chain registry is not worth more than a free off-chain registry, provided that security is not compromised.

You can “lose” your records if your contenthash disappears from the IPFS network, i.e. basically if your off-chain data disappears. That can be easily taken care of. There are plenty of free IPFS providers where users can additionally pin their data, besides the data hosting that we will provide through our IPFS node. A better question would be: are my records secure and uncompromisable? Yes, that’s why we will:

- make you sign your records so that they stop resolving if your name changes ownership.
- generate your IPNS keys client-side in the browser and handle pinning through the w3name provider; this way your IPNS private keys remain with you and never leave your browser.
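A minimal sketch of generating the IPNS key locally and deriving only the public IPNS name from it. It assumes an Ed25519 key and the libp2p peer-ID encoding (CIDv1, `libp2p-key` codec `0x72`, identity multihash of the protobuf-encoded public key), rendered in the base16 `f…` form used in the gateway URLs later in this thread. Node's `crypto` stands in for in-browser key generation, and publishing/pinning through w3name is not shown:

```javascript
const crypto = require("crypto");

// Generated locally; privateKey stays here (e.g. to sign IPNS records) and is never sent anywhere.
const { publicKey, privateKey } = crypto.generateKeyPairSync("ed25519");

// Raw 32-byte Ed25519 public key = last 32 bytes of its DER-encoded SPKI structure.
function rawEd25519(pub) {
  return pub.export({ type: "spki", format: "der" }).subarray(-32);
}

// IPNS name as a base16 ("f"-prefixed) CIDv1:
//   0x01 CID version 1 | 0x72 libp2p-key codec
//   0x00 0x24 identity multihash, 36-byte digest
//   0x08 0x01 0x12 0x20 protobuf PublicKey { Type: Ed25519, Data: 32 bytes } | raw key
function ipnsNameHex(pub) {
  const prefix = Buffer.from([0x01, 0x72, 0x00, 0x24, 0x08, 0x01, 0x12, 0x20]);
  return "f" + Buffer.concat([prefix, rawEd25519(pub)]).toString("hex");
}

const ipnsName = ipnsNameHex(publicKey); // safe to share publicly
const gatewayUrl = `https://ipfs-gateway.tld/ipns/${ipnsName}/`; // placeholder gateway host
```

Only `ipnsName` ever needs to leave the client; anyone can resolve it, but only the holder of `privateKey` can publish new record versions under it.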

2-3 weeks maximum. Our client is ready. Contracts are being tested. We’ll full send soon.

Boooom

Thanks for answering! This almost sounds too good to be true.

But yeah, if dwebsites work similarly, why not records too? Off-chain seems fine tbh, and secure enough the way you explain it, definitely with how records are used right now (simple info). But again (as is probably clear by now haha) I am no expert, so it’s hard for me to properly weigh the pros and cons. The wildcard resolution is very cool. I’ve read about it before but never really realised the full implications and possibilities I guess. Super exciting!

Just to confirm: if I can do anything I want and these gasless CCIP2 records will show up anywhere without any extra integration (besides ENSIP-10), dapps can choose to resolve some of the records and users can set any sort of record? Address, avatar, socials, any custom text record basically?

Take the showcase, for example. Wouldn’t there still need to be some standard? Especially as different addresses will use different resolvers, and content is set in different places. Or is something like

enough? And would it kinda be an ‘informal’ standard?

And if so, would such a record be something you would consider letting people easily set?

In hindsight, it will be the thing in plain sight that should have been built on sooner.

Correct. Off-chain resolution is already integrated in ethers.js and no additional integration is required. The standard ENS integration used by dApps comes with the CCIP-Read feature. That’s why CB.ID works seamlessly for Coinbase users.

Anything.

Currently, setting a text record of this nature will cost you $30 at 50 GWEI gas with only 2 NFTs in the showcase. It is unusable. With CCIP2, you can go crazy and add hundreds probably.

And would it kinda be an ‘informal’ standard?

We plan to propose this as an ENSIP standard. Here is the work-in-progress draft: https://github.com/namesys-eth/ccip2-resources/blob/main/docs/ENSIP-15.md

@0xc0de4c0ffee already teased it before here in the forum: Support IPLD Contenthash - #6 by 0xc0de4c0ffee

(simple info)

Absolutely. That’s all it is: securely hosted info, and ENS is no more than an on-chain database. On-chain secures this info with gas, we’ll secure this info with signatures.

Anything.

Amazing!! I think you are right that free/cheaper record setting would be great for ENS. And there’s always still the option to set them on-chain I guess. I’m reading up on all of your stuff!

I believe too tho that another reason for the lack of record setting is that people see no benefits. And I think a showcase (if widely implemented) would be interesting to some.

Still the question remains:

Should there be a standard on how to format the showcase? So wherever the record is set, offchain/L2/onchain, it is formatted the same? And so there is only one type of showcase record for dapps to look for. I think the answer is Yes.

Although everybody has suggested very similar formats, it seems like having no exact standard could cause problems, so having one is inevitable. But making it part of your ENSIP-15 seems a bit out of context?

But then, what is the best path for the showcase to be adopted?

Should some apps just start setting/resolving these custom records, or should the standard first be formalised within ENS? There’s a case to be made for both I guess. But probably the latter, so things like this won’t get overlooked:

expected behavior when a user transfers one of the NFTs out of their wallet.

Whole ENS-IP seems a bit extreme to me tho but I guess that is just how stuff works?

Will try to get more feedback from NFT and ENS users, and from sites that show NFTs, as in the end they would need to be convinced to implement this anyway I guess.

Like, what if you wanted to make the reverse: a ‘least favorite NFT’ section?

So nfts you hide in one place get hidden everywhere else, or listed at the bottom of all your nfts. Many people already hide nfts on opensea for multiple reasons.

Obv gas costs are expensive rn I get that.

This could maybe use the same standard I guess. Just worth considering how ENS could help formalize/push new records. There are many dapps with custom actions that could probably be used across crypto/ integrated with ENS already.

But I will keep it simple and just focus on this specific record for now.

I believe too tho that another reason for the lack of record setting is that people see no benefits. And I think a showcase (if widely implemented) would be interesting to some.

I will set records on all 65 of my names if they were free. Plenty of benefits, only gas standing in the way.

Should there be a standard on how to format the showcase? So wherever the record is set, offchain/L2/onchain, it is formatted the same? And so there is only one type of showcase record for dapps to look for. I think the answer is Yes.

Yes, there should be a standard. Otherwise, there’ll be too much variance to accommodate. We’ll propose the ENSIP and see where it goes.

as a part of your ENSIP-15 seems a bit out of context?

I wouldn’t say so; it seems well within context. You can come join us on GitHub Discussions and open an issue there: namesys-eth · Discussions · GitHub. We’ll discuss further there.

Whole ENS-IP seems a bit extreme to me tho but I guess that is just how stuff works?

To make the experience smooth for users, there are 2-3 moving parts and at least one novel implementation:

- Generates IPNS private keys in real time on the client side. Thanks to this, the user never shares their IPNS key with anyone. Having to share the IPNS private key was one of the biggest drawbacks of all IPNS services so far; literally none of them provided a hands-off service until we came along. This part makes up the majority of the ENSIP.
- We have separated the global contenthash (which hosts your usual “web-contenthash” + new records) from the “web-contenthash”. The reason is that we don’t want users to ruin their records every time they update their web-contenthash, since both would be hosted under the same IPNS key. That would be bad design. So we made way for a bypass, which needs to be defined explicitly and in detail.

An ENSIP should describe everything in sufficient detail, which may make it a tedious read. We’ll draft easier docs for the layman too. All in good time.

I will set records on all 65 of my names if they were free. Plenty of benefits, only gas standing in the way.

This is real. Still, I think convincing people/providing clear benefits is part of it too. Just look at the number of primary names set (520k) versus avatars (76k) or contenthash (20k).

I wouldn’t say so; it seems well within context. You can come join us on Github discussions and open an issue there: namesys-eth · Discussions · GitHub . We’ll discuss further there

Amazing @isX, I’d really love to see this happen!! I’ll reach out to you. Should there maybe then be some type of request from the community for other new records, so those might be implemented and specified in this ENSIP too?

Yeah easier docs will definitely help, and seeing it in action too.

I’d love to hear what other people and commenters like @zadok7 @jefflau.eth @liubenben @cory.eth think about this too. Or just leave a like.

L2 resolvers are very much in active development! Some of those developments were discussed in the most recent ENS Town Hall Q1 2023.

Should there maybe then be some type of request off the community for other new records, so those might be implemented and specified in this ENSIP too?

When the ENSIP is proposed as a TempCheck, the community will have sufficient time to request features during the review stage. If you (or anyone else) want a gasless feature, watch out for ENSIP-15 and ENSIP-16 and keep an eye on our GitHub.

P.S. CCIP2.eth is a layer-agnostic Resolver. It works on any layer. It is especially designed for L1 Mainnet but can be deployed as-is on any EVM layer.

Just throwing some ideas out there:

For data like a showcase, I think I’d want the text record to be interpreted as a URI by default. That way you can get quasi record-level CCIP read via fetch or IPFS resolution.

IMO, a showcase standard is probably best defined as an ordered list of NFT descriptors (using something like the ENS Avatar spec), where showing your “Top 5 NFTs” is simply a matter of taking the first 5 and displaying them.
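A sketch of the ordered-list idea, assuming (hypothetically) that the `showcase` value is a JSON array of avatar-spec style descriptors (`eip155:<chainId>/<erc721|erc1155>:<contract>/<tokenId>`). "Top N" is just a slice after dropping malformed entries; the optional `isStillOwned` callback is one possible, client-side answer to the transferred-NFT question raised earlier in the thread:

```javascript
// Parse one avatar-spec style descriptor: eip155:<chainId>/<erc721|erc1155>:<contract>/<tokenId>
function parseNftDescriptor(s) {
  const m = /^eip155:(\d+)\/(erc721|erc1155):(0x[0-9a-fA-F]{40})\/(\d+)$/.exec(String(s));
  if (!m) return null;
  return { chainId: Number(m[1]), standard: m[2], contract: m[3].toLowerCase(), tokenId: m[4] };
}

// Hypothetical showcase value: a JSON array of descriptors, most important first.
function topShowcase(recordValue, n, isStillOwned = () => true) {
  let list;
  try {
    list = JSON.parse(recordValue);
  } catch {
    return []; // not JSON: nothing to show
  }
  if (!Array.isArray(list)) return [];
  return list
    .map(parseNftDescriptor)
    .filter((d) => d !== null && isStillOwned(d))
    .slice(0, n);
}

// Usage; the second contract address is a placeholder.
const showcaseValue = JSON.stringify([
  "eip155:1/erc721:0xed5af388653567af2f388e6224dc7c4b3241c544/1",
  "eip155:1/erc1155:0x1111111111111111111111111111111111111111/7",
]);
const top5 = topShowcase(showcaseValue, 5); // both entries, in their original order
```

Keeping order in the record itself means every app that reads it shows the same "favorites first" ranking without extra fields.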

However, storing multiple URI descriptors in records is pretty wasteful as a single value probably spans multiple storage slots. There could be a short placeholder URI, like self, where setText("showcase", "self") is batch resolved by taking every record that returned self, and making a single HTTP GET to the URL returned by text("com.self") with params for records that returned self along with the node.

For example:

```javascript
// set
setText("com.self", "https://raffy.com/");
setText("showcase", "self");

// get
let self_url = getText("com.self");
let texts = ['showcase']; // records that returned self
let url = new URL(self_url);
url.searchParams.set('node', node);
for (let key of texts) url.searchParams.append('text', key);
let records = await fetch(url).then(r => r.json());
// { "text": { "showcase": [...] } }
```

However, this would still require a fresh storage slot per record to allocate the self record, so maybe the response could return additional records too:

Request: GET https://raffy.com/?node=0x123..456&text=showcase

Response: "application/json" { "text": { "showcase": [...], "name": "Raffy" } }

Additional idea: the self URI could have a security overload like self:[hash-prefix], where the leading bytes of keccak256(bytes of value) must match the provided hash (which permits hosting static bits on lesser-quality hosts).
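A small sketch of that opt-in `self:[hash-prefix]` check. It swaps in SHA-256 for keccak256 because Node's `crypto` module has no keccak256 (its `sha3-256` is the NIST-padded variant, which yields different digests); the `self` syntax itself is the placeholder from the post:

```javascript
const crypto = require("crypto");

// Parse the placeholder "self" / "self:<hex prefix>" record value.
function parseSelfRef(value) {
  const m = /^self(?::([0-9a-fA-F]+))?$/.exec(value);
  return m ? { hashPrefix: m[1] ? m[1].toLowerCase() : null } : null;
}

// Accept bytes fetched from the "self" URL only if their digest starts with the
// pinned prefix. SHA-256 stands in for keccak256 here.
function passesIntegrity(ref, fetchedBytes) {
  if (ref.hashPrefix === null) return true; // plain "self": the check is opt-in
  const digest = crypto.createHash("sha256").update(fetchedBytes).digest("hex");
  return digest.startsWith(ref.hashPrefix);
}

// Usage: pin the first 8 bytes (16 hex chars) of the digest when setting the record.
const body = Buffer.from('{"text":{"showcase":[]}}');
const pinned = "self:" + crypto.createHash("sha256").update(body).digest("hex").slice(0, 16);
const ref = parseSelfRef(pinned);
const ok = passesIntegrity(ref, body); // true
const tampered = passesIntegrity(ref, Buffer.from('{"text":{}}'));
```

The prefix length trades on-chain bytes for collision resistance; even a few bytes make it impractical for an untrusted host to substitute a different body.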

Additional idea: the parent URI could use the shared text("com.self") from the parent node. Or, the default com.self provider could be https://<name>.limo/profile.json.

The name self is just a placeholder, it could be a single control character.

We’re trying hard not to break anything in the active ENS/IP specs, so we don’t recommend reading JSON directly from eth.limo/…; that would require new ENSIP specs. We use a “randomized” list of public IPFS gateways as CCIP gateways to stay “decentralized” and read everything as “normal” CCIP’d ENS records.

```solidity
function text(bytes32 node, string calldata key) external view returns (string memory value);
```

```javascript
import { BrowserProvider } from "ethers";

// get
let provider = new BrowserProvider(window.ethereum)
let resolver = await provider.getResolver("broke.eth")
let showcase = await resolver.getText("showcase")

// set
let _data = "data:application/json,..." // actual json string to resolve
// signed by owner/manager
let showcase_json = {"data": "<Resolver.__callback.selector + abi.encode(abi.encode(_data), signature, signer)>"}
// "data" here follows the CCIP-Read response format
```

We’re testing “IPNS+IPFS” for the storage pointer, and making sure we don’t break future IPNS+IPLD compatibility. (Support IPLD Contenthash - #7 by 0xc0de4c0ffee)

The text record key “showcase” is stored as a .json file in a .well-known directory format: https://ipfs-gateway.tld/ipns/f<ipns-hex>/.well-known/eth/broke/showcase.json

The reversed eth/domain/sub… directory format supports * wildcard subdomain records in the same storage, e.g. https://ipfs-gateway.tld/ipns/f<ipns-hex>/.well-known/eth/broke/not/avatar.json for not.broke.eth’s avatar.
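A sketch of how a client might build those URLs from a name and a key, assuming plain lowercase labels (real ENS name normalization is more involved) and a placeholder gateway host:

```javascript
// Reverse the labels so "not.broke.eth" maps to "eth/broke/not", then append "<key>.json".
// Lowercasing is a simplification of real ENS name normalization.
function recordPath(ensName, key) {
  const labels = ensName.toLowerCase().split(".").filter((l) => l.length > 0).reverse();
  return `.well-known/${labels.join("/")}/${encodeURIComponent(key)}.json`;
}

// Trailing slashes on the gateway are trimmed before joining.
function recordUrl(gateway, ipnsHex, ensName, key) {
  return `${gateway.replace(/\/+$/, "")}/ipns/${ipnsHex}/${recordPath(ensName, key)}`;
}

const showcaseUrl = recordUrl("https://ipfs-gateway.tld/", "f<ipns-hex>", "broke.eth", "showcase");
// "https://ipfs-gateway.tld/ipns/f<ipns-hex>/.well-known/eth/broke/showcase.json"
```

Reversing the labels is what lets one IPNS key serve a whole subtree: every subdomain's records live under its parent's directory.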

Hi hi @raffy, thanks for commenting! And sorry for my late reply, I had some stuff come up… I really am trying to understand the whole technical side of this (integrating ENS and resolvers etc.) more deeply because I’d love for this to happen, but I am definitely in no place to realistically reply to your suggestions. Why do you think having the text record interpreted as a URI by default would be best? And what do you think about @0xc0de4c0ffee’s response? And thoughts on their idea generally?

(I love the broke.eth example btw)

Their suggestion seems super smart to me. And the other record idea too! But maybe @isX you could explain simply one more time for me (sorry) how, with your current idea, a user on one dapp, let’s say OpenSea, would set an ENS showcase record there, and another dapp, like Coinbase profiles, would showcase those NFTs. And what would need to be done by both to make that happen.

Offchain ENS seems to be getting more common, and a focus of ENS too (cb.id and now even offchain ENS names), but it still seems like a lot of custom work. @serenae’s new EIP suggestion might be relevant for such a showcase record? Or @matoken.eth’s reply to @jefflau.eth’s ENSIP on CCIP metadata? Let’s see what happens with these three proposals. Like @isX and @0xc0de4c0ffee, I think the showcase might be an interesting use case for all of this, as it’s relevant to many existing dapps and really takes advantage of no (or lower) gas fees. I will keep trying to figure out how this all works; very cool.

More generally regarding the showcase: most platforms I spoke with seem to (really) like the idea of an ENS showcase. But it’s hard to go much deeper without exact technical details on how they would implement this. Other dapps specifically focused on NFT curation are a bit more hesitant tho. But I think integrating an ENS showcase might be a chance to make their product even better and show more nice-looking accounts. Having their own resolver or integrating a good one? Setting this record directly in-app? Adding additional context? Focusing more on UI? etc.

ENSers please excuse me again for the long (and ignorant) message, with so many questions and loose ends. It might not seem like it but I really am trying to figure out how all this works outside of this thread too haha. I’m really thankful for all the feedback and replies <3

p.s if anybody has any more specific comments regarding the formatting of the record please comment or lmk!

Can you clarify for me, what exactly is the benefit of this scheme over generic URIs? My claim would be that since we already need to fetch the avatar, additional client-logic seems fine.

TBH, I’ve always been skeptical of the contenthash mechanic (and encoded coinType addr() records), as they seem like huge technical burdens when simpler, generic encodings get you nearly the same thing (reduced storage).

For a person using a wildcard resolver, storage is a non-issue. I’d expect future efficiency thru a “multicall” ccip or “multicall” ExtendedResolver (single fetch). However, for a person using an on-chain resolver, it seems desirable to mix on-chain and external records. The question is how to reference them efficiently and at what “logic layer” are they resolved (re: avatar.)

My quarter-baked idea above about self was a few things mixed together: (1) sharing some subdata between multiple records, (2) parallelizing the access (single fetch), and (3) allowing record-level partial content hashes for integrity (opt-in).

Again, just throwing ideas out there:

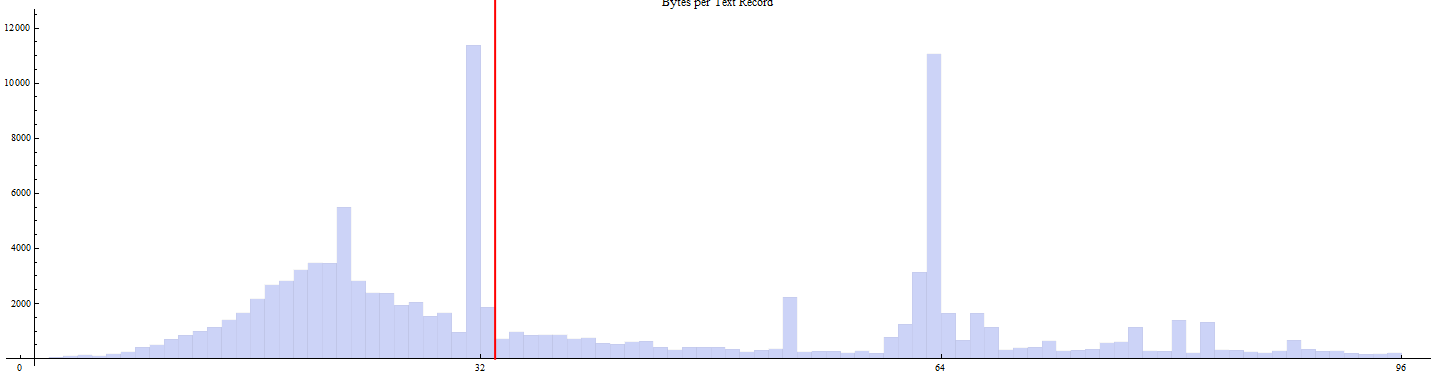

(1) We should optimize the Public Resolver contract for more efficient storage. At the moment, you pay 1 slot for 1-31 bytes, 2 slots for 32 bytes, and 3 slots for 33-64 bytes.

I did a quick inventory on the Public Resolvers for “avatar”, “description”, “email”, and “url”. The median record is 33 bytes, which means half of all text records consume an extra sstore (20000 gas) for no reason.
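The slot counts above follow from Solidity's `string`/`bytes` storage layout (values under 32 bytes share one slot with their length; longer values use a length slot plus one slot per 32 bytes). A quick sketch of the arithmetic, with a hypothetical length-prefixed layout (1-byte length, so values up to 255 bytes) for comparison:

```javascript
// Slots written for a Solidity `string`/`bytes` value of `len` bytes.
function solidityStringSlots(len) {
  if (len === 0) return 0; // an empty value leaves the slot zeroed
  return len < 32 ? 1 : 1 + Math.ceil(len / 32);
}

// Hypothetical tighter layout: a 1-byte length prefix packed in front of the data.
function packedSlots(len) {
  return len === 0 ? 0 : Math.ceil((len + 1) / 32);
}

// A fresh (zero -> non-zero) SSTORE costs 20,000 gas; EIP-2929 cold-access
// surcharges are ignored in this estimate.
const SSTORE_SET = 20000;
const gas = (slots) => slots * SSTORE_SET;

// Median 33-byte record: 3 slots today vs 2 when packed, i.e. one extra SSTORE.
const wasted = gas(solidityStringSlots(33)) - gas(packedSlots(33)); // 20000
```

For 31, 32 and 64 bytes both layouts cost the same; the waste is concentrated in the 33-62 byte range where many URLs and descriptions land.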

(2) What if we create an ENS Text Record URI, like the avatar string, but more general:

For reference, avatar string: eip155:1/erc1155:contract/token

What about: enstxt:[chain]/[ens]/[text-key], eg. enstxt:1/raffy.eth/url

This resolves by ENS resolving "raffy.eth" on mainnet and returning the text record for "url"
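A sketch of parsing the proposed `enstxt:` URI. The scheme is only a proposal here, and `lookupText` is a hypothetical synchronous callback standing in for a real (asynchronous) ENS text lookup on the given chain:

```javascript
// Parse the proposed "enstxt:[chain]/[ens]/[text-key]" URI (a proposal, not a standard).
function parseEnsTxt(uri) {
  const m = /^enstxt:(\d+)\/([^/]+)\/(.+)$/.exec(uri);
  return m ? { chainId: Number(m[1]), name: m[2], key: m[3] } : null;
}

// `lookupText(chainId, name, key)` is hypothetical; a real client would run an
// ENS resolution on the given chain instead.
function resolveEnsTxt(uri, lookupText) {
  const ref = parseEnsTxt(uri);
  if (ref === null) throw new Error(`not an enstxt URI: ${uri}`);
  return lookupText(ref.chainId, ref.name, ref.key);
}

// Usage with an in-memory table standing in for ENS.
const table = new Map([["1/raffy.eth/url", "https://raffy.antistupid.com"]]);
const resolvedUrl = resolveEnsTxt("enstxt:1/raffy.eth/url", (c, n, k) => table.get(`${c}/${n}/${k}`) ?? null);
// "https://raffy.antistupid.com"
```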

If you couple this with a helper contract that uses EIP-165 to auto-detect contract type and generate the avatar string dynamically, then:

enstxt:1/1.azuki.nft-owner.eth/avatar → eip155:1/erc721:0xed5af388653567af2f388e6224dc7c4b3241c544/1

(3) We should have self-referencing resolver records:

Either, allow records to recursively refer to themselves through some template mechanism that’s resolved client-side:

url = "https://raffy.antistupid.com"

avatar = "{url}/avatar.img"
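The client-side variant could look roughly like this. `{key}` is the illustrative syntax from the post, not an existing standard, and the choices that missing keys expand to an empty string and that reference cycles throw are assumptions:

```javascript
// Expand "{key}" placeholders against the same name's records, recursively.
// `seen` tracks the current reference chain so cycles are rejected.
function expandRecord(records, key, seen = new Set()) {
  if (seen.has(key)) throw new Error(`cyclic reference via "${key}"`);
  const raw = records.get(key);
  if (raw === undefined) return ""; // missing record expands to nothing
  const path = new Set(seen).add(key);
  return raw.replace(/\{([^{}]+)\}/g, (_, ref) => expandRecord(records, ref, path));
}

const records = new Map([
  ["url", "https://raffy.antistupid.com"],
  ["avatar", "{url}/avatar.img"],
]);
const avatar = expandRecord(records, "avatar"); // "https://raffy.antistupid.com/avatar.img"
```

Doing this in the client keeps the resolver unchanged, at the cost of every app having to agree on the template syntax.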

Or, just implement the same template logic into the resolver itself:

url = <lit:28:"https://raffy.antistupid.com">

avatar = <ref:3:"url"><lit:11:"/avatar.img">

This could also support hex encoding:

$eth = <hex:20:0x51050ec063d393217B436747617aD1C2285Aeeee>

This would allow collapsing addr and text into the same storage mechanism.

Pseudo-code:

```
// use efficient storage tech instead of default solidity bytes/string storage
setRaw(bytes32 node, string key, bytes memory v) { ... }
raw(bytes32 node, string key) returns (bytes) {}

uint256 constant LIT = 0;
uint256 constant HEX = 2;
uint256 constant SELF_REF = 3; // same resolver, same node
uint256 constant NODE_REF = 4; // same resolver, diff node (NAME_REF might be better in all cases)
uint256 constant NAME_REF = 5; // diff resolver, diff node

function concat(tuple(uint8 type, bytes data)[]) pure returns (bytes) {
    // return bytes that can be decoded by expand()
}

expand(bytes32 node, bool text, bytes memory v) returns (bytes) {
    // treat the input as a sequence of tokens, of the following types:
    // 1. LIT(eral): data = consume(readLen())
    //    eg. <lit:5:"raffy">
    // 2. HEX: data = consume(readLen())
    //    eg. <hex:2:0xABCD>
    //    convert to "0xABCD" if text is true
    // 3. SELF_REF(erence): data = expand(node, text, raw(node, string(consume(readLen()))))
    //    eg. <ref:3:"url">
    // 4. NODE_REF(erence): n = consume(32); data = expand(n, text, raw(n, string(consume(readLen()))))
    //    eg. <noderef:0x123...:6:"avatar">
    // 5. NAME_REF(erence):
    //    eg. <nameref:"10:raffy2.eth":6:"avatar">
    //    node = namehash(consume(readLen()));
    //    resolver = SmartResolver(ens.resolver(node));
    //    key = string(consume(readLen()));
    //    data = resolver.expand(node, text, resolver.raw(node, key));
    //
    // return the concatenated result
}

addr(bytes32 node, uint256 coinType) returns (bytes) {
    // convert coinType to a record key + coder contract
    (string key, AddrCoder coder) = coderFromCoinType(coinType);
    // read the raw record, expand it, decode it
    return coder.decode(coinType, expand(node, false, raw(node, key)));
}

text(bytes32 node, string key) returns (string) {
    // read the raw record, expand it as a string
    return string(expand(node, true, raw(node, key)));
}

interface AddrCoder {
    function decode(uint256 coinType, bytes memory v) pure returns (bytes memory);
    function encode(uint256 coinType, bytes memory v) pure returns (bytes memory);
}

// allow "eth" addr record to be accessed via "$eth" too
// EthCoder contract enforces 20 bytes and applies address checksum
defineAddrCoder(60, "$eth", <address of EthCoder contract>);

// traditional setters are just wrappers around setRaw()
setAddr(node, coinType, bytes value) {
    (string key, AddrCoder coder) = coderFromCoinType(coinType);
    // this would be inefficient since you can run the coder offchain
    setRaw(node, key, coder.encode(coinType, value));
}

setText(node, key, string value) {
    setRaw(node, key, concat([(LIT, bytes(value))]));
}

// new setters
setHex(node, key, bytes value) => setRaw(node, key, concat([(HEX, value)]));
```

Example usage:

```
node = namehash("raffy.eth")

// set url traditionally
setText(node, "url", "https://raffy.antistupid.com")

// set coinType via hex setter
setHex(node, "$eth", '0x51050ec063d393217B436747617aD1C2285Aeeee')

// both work:
text(node, "$eth") => "0x51050ec063d393217B436747617aD1C2285Aeeee"
addr(node, 60) => 0x51050ec063d393217B436747617aD1C2285Aeeee

// set avatar via reference
setRaw(node, "avatar", concat([(SELF_REF, "url"), (LIT, "/ens.jpg")]));
text(node, "avatar") => "https://raffy.antistupid.com/ens.jpg"
```
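To make the token idea concrete, here is a runnable in-memory model of `concat()`/`expand()` covering only the `LIT`, `HEX` and `SELF_REF` tokens. The byte layout (1-byte type, 2-byte big-endian length, payload) is a made-up choice for illustration, not a spec, and a `Map` stands in for one node's resolver storage:

```javascript
// Token types, numbered as in the pseudo-code above.
const LIT = 0, HEX = 2, SELF_REF = 3;

// Hypothetical byte layout per token: [type: 1 byte][length: 2 bytes BE][payload].
function concat(tokens) {
  return Buffer.concat(tokens.map(([type, data]) => {
    const payload = Buffer.isBuffer(data) ? data : Buffer.from(data, "utf8");
    const header = Buffer.alloc(3);
    header.writeUInt8(type, 0);
    header.writeUInt16BE(payload.length, 1);
    return Buffer.concat([header, payload]);
  }));
}

// Expand one record's raw bytes; `raw` maps key -> Buffer for a single node.
function expand(raw, bytes, asText, depth = 0) {
  if (depth > 8) throw new Error("reference chain too deep (cycle?)");
  const parts = [];
  for (let i = 0; i < bytes.length; ) {
    const type = bytes.readUInt8(i);
    const len = bytes.readUInt16BE(i + 1);
    const data = bytes.subarray(i + 3, i + 3 + len);
    i += 3 + len;
    if (type === LIT) {
      parts.push(data);
    } else if (type === HEX) {
      // As text: "0x" + lowercase hex (EIP-55 checksum casing needs keccak256, omitted).
      parts.push(asText ? Buffer.from("0x" + data.toString("hex"), "utf8") : data);
    } else if (type === SELF_REF) {
      const target = raw.get(data.toString("utf8"));
      if (target !== undefined) parts.push(expand(raw, target, asText, depth + 1));
    } else {
      throw new Error(`unsupported token type ${type}`);
    }
  }
  return Buffer.concat(parts);
}

const textRecord = (raw, key) => expand(raw, raw.get(key) ?? Buffer.alloc(0), true).toString("utf8");
const binaryRecord = (raw, key) => expand(raw, raw.get(key) ?? Buffer.alloc(0), false);

// Mirror of the example usage above, for a single node.
const raw = new Map();
raw.set("url", concat([[LIT, "https://raffy.antistupid.com"]]));
raw.set("$eth", concat([[HEX, Buffer.from("51050ec063d393217b436747617ad1c2285aeeee", "hex")]]));
raw.set("avatar", concat([[SELF_REF, "url"], [LIT, "/ens.jpg"]]));

textRecord(raw, "avatar"); // "https://raffy.antistupid.com/ens.jpg"
textRecord(raw, "$eth");   // "0x51050ec063d393217b436747617ad1c2285aeeee"
binaryRecord(raw, "$eth"); // 20-byte Buffer, what an addr(node, 60) coder would decode
```

The same stored bytes serve both the `text()` and `addr()` views, which is the "collapse addr and text into one storage mechanism" point.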

Can you clarify for me, what exactly is the benefit of this scheme over generic URIs? My claim would be that since we already need to fetch the avatar, additional client-logic seems fine.

The {data:"0x__bytes"} format comes from the CCIP-Read spec and lets off-chain records be read like “normal” on-chain ENS records. It’s a data: URI-prefixed JSON string, so it also works with a normal URL pointing to JSON (similar to ERC-721/1155 token URI functions). These are all off-chain record formats, so we don’t have to count gas cost.

Our current resolver design is testnet ready.

https://github.com/namesys-eth/ccip2-eth-resolver/blob/main/src/Resolver.sol

TBH, I’ve always been skeptical of the contenthash mechanic (and encoded coinType addr() records), as they seem like huge technical burdens when simpler, generic encodings get you nearly the same thing (reduced storage).

The current multicodec contenthash is the best format out there; the old content was bytes32… coinType/SLIP44 is simple compared to the reverse record management loop and the gas cost for a brand-new subdomain.

I was looking into plaintext/data URIs as contenthash so we could run a <0xaddr>.<balanceof>.mytoken.eth subdomain resolver with an on-chain json/svg/html generator that dynamically generates the contenthash for that request as abi.encode(string("data:application/json,{balance : <balance of 0x addr>, ...})).

There’s also a bytes3(“pla”) prefix draft in multicodec for plaintext, but I think it’s better to use bytes5(“data:”) as the prefix identifier if there’s ever such an ENSIP.

- plaintextv2 multiaddr 0x706c61 draft

multicodec/table.csv at master · multiformats/multicodec · GitHub
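A sketch of how a client might tell such a contenthash apart, assuming the `data:` prefix convention suggested above (there is no ENSIP for it) and the draft `pla` (`0x706c61`) prefix; anything else is treated as a regular multicodec contenthash. Percent-encoding the JSON is a choice made here so the payload is a well-formed data URI:

```javascript
// Prefixes discussed above: "data:" (0x646174613a) and the draft plaintextv2 "pla" (0x706c61).
const DATA_PREFIX = Buffer.from("data:", "utf8");
const PLAINTEXT_PREFIX = Buffer.from([0x70, 0x6c, 0x61]);

function classifyContenthash(bytes) {
  if (bytes.subarray(0, DATA_PREFIX.length).equals(DATA_PREFIX)) return "data-uri";
  if (bytes.subarray(0, PLAINTEXT_PREFIX.length).equals(PLAINTEXT_PREFIX)) return "plaintextv2";
  return "multicodec"; // e.g. the usual ipfs/ipns contenthash encodings
}

// Encode/decode a JSON data URI; the JSON is percent-encoded so the URI is well-formed.
function jsonDataUri(obj) {
  return Buffer.from("data:application/json," + encodeURIComponent(JSON.stringify(obj)), "utf8");
}

function decodeJsonDataUri(bytes) {
  const s = bytes.toString("utf8");
  return JSON.parse(decodeURIComponent(s.slice(s.indexOf(",") + 1)));
}

// A dynamically generated balance document (values are made up).
const contenthash = jsonDataUri({ balance: "0.1" });
classifyContenthash(contenthash); // "data-uri"
```

A 5-byte ASCII prefix is unambiguous against existing multicodec contenthashes, which start with a varint codec such as `0xe3` for IPFS.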

ccip2-eth-resolver/Resolver.sol at main · namesys-eth/ccip2-eth-resolver · GitHub

If I understand this correctly: this is an ExtendedResolver where queries are translated to URLs for assets on IPFS, which are (likely?) stored as pre-signed abi-encoded CCIP responses. The contract has a registry for (node → contenthash) rather than relying on a contract-level CCIP backend.

This is interesting! I like that it works with existing CCIP tech but I’m not sure I understand the "...json?t={data}&format=dag-json" use-case (unless this is being used for something I’m unaware of) — wouldn’t any endpoint that can (dynamically) respond to this, be better served by their own trusted CCIP setup? It seems like the static case (eg. pre-signed response data for text or addr record) is the primary use-case for this technique. However, assuming static data, couldn’t the contenthash requirement be relaxed to an arbitrary URL prefix? With the only requirement being wildcard CORS?

I was looking into plaintext/data URIs as contenthash so we could run <0xaddr>.<balanceof>.mytoken.eth subdomain resolver

I like this idea. This is related to NFTResolver. Even something like text("eth.balance.raffy.svgpfp.eth", "avatar") → data:...<svg>0.1 eth</svg> would be cool (although there’s no specification for liveness, and many social applications that show avatars have caching mechanisms).

I still think there’s value in having an On-chain resolver with individual records that can be stored Off-chain, compared to an Off-chain resolver with decentralized storage. For example, once you have external references, using IPFS becomes a personal choice.

One way of thinking of this, is as a generalization of the avatar string spec.

The issue with this approach is that you need some new standard (decentralized fetch + optional integrity/validation check) and you need an efficient way to encode resource identifiers.

For example, if a text("name.eth", "description") returned myipfs:story — why couldn’t that invoke fetch("https://name.eth.limo/story") client-side? Or mycontent:avatar.json → where contenthash is used instead of ens + dweb gateway?

For signing, I think all you need is a single bit in the reference, like myipfs:story?x, to indicate that the response should be interpreted as a signature + blob, which must validate against the records owner. Alternative checks might be integrity via hash, or checking a different signer, eg. NFT data signed by the EIP-173 owner().
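A sketch of the reference idea, using the post's illustrative `myipfs:` scheme and `?x` flag (neither is specified anywhere). The flag only marks the fetched body as signature + blob; actually verifying it against the record owner is left out:

```javascript
// Hypothetical "myipfs:<path>[?x]" reference, resolved against <name>.limo.
function parseMyIpfsRef(value, ensName) {
  const m = /^myipfs:([^?]+)(\?x)?$/.exec(value);
  if (!m) return null;
  return {
    url: `https://${ensName}.limo/${m[1].replace(/^\/+/, "")}`,
    mustBeSigned: m[2] !== undefined, // "?x": body must validate against the owner
  };
}

const plainRef = parseMyIpfsRef("myipfs:story", "name.eth");
// { url: "https://name.eth.limo/story", mustBeSigned: false }
const signedRef = parseMyIpfsRef("myipfs:story?x", "name.eth");
// { url: "https://name.eth.limo/story", mustBeSigned: true }
```

A single flag keeps the on-chain reference short while letting the client decide how strictly to treat the fetched content.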

My post above was one idea for compacting these references. Alternatively, since ENS is literally a system for naming things, I’d imagine ENS names themselves are a solution to this problem! Do we need ipfs://Qmumbojumbo if we have "abc.eth" + decentralized fetch?

If I understand this correctly — this is an ExtendedResolver where queries are translated to URLs for assets on IPFS

Correct, more specifically: on IPFS storage pointed to by an IPNS key, which makes the records freely upgradeable.

are stored as pre-signed abi-encoded CCIP responses

Correct. Responses contain the abi-encoded value pre-signed by the manager/owner of the name.

The contract has a registry for (node → contenthash) rather than relying on a contract-level CCIP backend.

Correct. The intention was to remove the need for a CCIP backend and instead use upgradeable and secure decentralised storage.

Side-note: the contenthash in (node → contenthash) is the global contenthash, which contains the text records + the traditional ‘web’ contenthash (web3-hosted content by the user that we use today).

This is interesting! I like that it works with existing CCIP tech but I’m not sure I understand the "...json?t={data}&format=dag-json" use-case

This is to fetch the latest version of the record resolved via the IPNS key, since older versions may linger on the IPFS network during IPNS propagation (immediately after an update). IPNS propagation can take up to 10 minutes in extreme cases.

wouldn’t any endpoint that can (dynamically) respond to this, be better served by their own trusted CCIP setup? It seems like the static case (eg. pre-signed response data for text or addr record) is the primary use-case for this technique. However, assuming static data, couldn’t the contenthash requirement be relaxed to an arbitrary URL prefix? With the only requirement being wildcard CORS?

Absolutely. Our primary intention is to remove any centralised pain points from the pipeline and make this a trust-free service. We use IPNS for upgradeability while leaving the IPNS private keys with the user and only handling the IPNS public key pinning on the network. This allows our service to function in a fully decentralised manner without any trust-based assumptions from either side. Someone else may choose their own web2 server or any generic endpoint in principle to broadcast the records dynamically. On the contract side, it will only take trivial changes to the code.

I still think there’s value in having an On-chain resolver with individual records that can be stored Off-chain, compared to an Off-chain resolver with decentralized storage. For example, once you have external references, using IPFS becomes a personal choice.

Indeed. For a public good that this service is, we didn’t want to handle any servers ourselves and IPFS was a natural choice. Other use-cases may use another mechanism to feed the CCIP call.

For example, if a text("name.eth", "description") returned myipfs:story — why couldn’t that invoke fetch("https://name.eth.limo/story") client-side? Or mycontent:avatar.json → where contenthash is used instead of ens + dweb gateway?

Do we need ipfs://Qmumbojumbo if we have "abc.eth" + decentralized fetch?

The reason behind the ipfs://Qmumbojumbo is that if we used the same eth.limo gateway to fetch the records as well as the user’s regular web contenthash, the user would have to make sure they don’t remove the records by mistake whenever they update their contenthash. This is highly inconvenient and risky; we don’t want the records and the regular web content in the same basket. We needed to separate the web contenthash from the global contenthash (which is used to fetch the records and the web contenthash) so that the records and the web contenthash cannot interfere with each other. For this reason, we use IPFS gateways to resolve the records and leave resolution of the web contenthash to the eth.limo gateway. Separating the gateways breaks this undesirable cross-dependency.
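The separation can be pictured as two independent roots per name: records live under their own IPNS key and are read through an IPFS gateway, while the website stays behind the regular contenthash on eth.limo. A hypothetical sketch (the NameSetup fields, the ipfs.io gateway, and the record path are assumptions) showing that a website update leaves the records URL untouched:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class NameSetup:
    name: str                # e.g. "abc.eth"
    records_ipns_key: str    # IPNS key that only holds records (hypothetical layout)
    web_contenthash: str     # the regular website content


def records_url(setup: NameSetup, record_path: str, gateway: str = "ipfs.io") -> str:
    # Records are resolved through an IPFS gateway using only the records key.
    return f"https://{gateway}/ipns/{setup.records_ipns_key}/{record_path}"


def website_url(setup: NameSetup) -> str:
    # eth.limo reads the name's regular contenthash itself; records play no part here.
    return f"https://{setup.name}.limo/"


before = NameSetup("abc.eth", "k51records", "ipfs://site-v1")
after = replace(before, web_contenthash="ipfs://site-v2")  # a website update
print(records_url(before, "avatar.json") == records_url(after, "avatar.json"))
print(website_url(after))
```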

I like that it works with existing CCIP tech but I’m not sure I understand the "...json?t={data}&format=dag-json" use-case (unless this is being used for something I’m unaware of). Wouldn’t any endpoint that can (dynamically) respond to this be better served by its own trusted CCIP setup?

?t={data} sets the TTL/cache time of the URL; {data} is automatically replaced during CCIP-Read’s GET request. Our current default value is abi.encodePacked(uint64(block.timestamp / 60) * 60), i.e. the timestamp rounded down to the minute.
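The ?t= value can be reproduced off-chain to see its effect: every lookup within the same minute produces the same URL (so gateway and browser caches can be reused), and the URL changes once the minute rolls over. A minimal sketch of abi.encodePacked(uint64(block.timestamp / 60) * 60) and of EIP-3668 placeholder substitution; the gateway host and record path in the template are hypothetical, since the thread elides the real path:

```python
def ttl_bucket_data(timestamp: int, window: int = 60) -> str:
    """Python equivalent of abi.encodePacked(uint64(block.timestamp / 60) * 60).

    Solidity integer division floors the timestamp to the start of the window, and
    encodePacked of a uint64 is its 8-byte big-endian form; CCIP-Read substitutes it
    into {data} as 0x-prefixed hex.
    """
    bucket = (timestamp // window) * window
    return "0x" + bucket.to_bytes(8, "big").hex()


def expand_ccip_url(template: str, sender: str, call_data: str) -> str:
    """Substitute the EIP-3668 placeholders; a template containing {data} is fetched with GET."""
    return template.replace("{sender}", sender.lower()).replace("{data}", call_data.lower())


# Hypothetical template modelled on the "...json?t={data}&format=dag-json" suffix.
template = "https://gateway.example/ipns/EXAMPLE_KEY/avatar.json?t={data}&format=dag-json"
sender = "0xABCDEF0000000000000000000000000000000001"  # placeholder resolver address
a = expand_ccip_url(template, sender, ttl_bucket_data(1_700_000_001))
b = expand_ccip_url(template, sender, ttl_bucket_data(1_700_000_039))
print(a == b)  # both timestamps fall in the same minute
```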

&format=dag-json comes from our IPNS+IPLD tests comparing dag-cbor and dag-json. We were testing some “not implemented” features on public gateways that prevent our IPNS+IPFS and IPNS+IPLD features from working in the same resolver without that format suffix. If we add the format request, it works for IPLD dag-cbor but breaks IPFS. We’re using IPNS+IPFS for now, as this will be fixed automatically once public gateways implement IPNS+IPLD and return the normal IPLD dag v0/API format.

ENS DAO should send in some dedicated rockstar devs like you, @raffy, to explore a dag-ens implementation for the future and extend the current dag-eth (IPLD Specs: DAG-ETH). ipld://b<mumbojumbo> everything. We don’t have the active devs, funds, and time to go full dag-ens mode; it’d be fun to do reverse lookups from ipld://prefix+namehash to domain.eth and add/update FULL ENS records packed into an ENS-specific dag format. I have some basic tests from 2020, when I was way more overconfident than now. I was playing with the wrong dag-pb… Add ENS Namehash by 0xc0de4c0ffee · Pull Request #184 · multiformats/multicodec · GitHub
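The ipld://prefix+namehash idea builds on ENS's namehash (EIP-137), the value the linked multicodec PR is about. For reference, a minimal pure-Python sketch of namehash. Python's hashlib.sha3_256 is NIST SHA3, whose padding differs from Ethereum's Keccak-256, so a small Keccak is included. The sketch assumes the name is already ENS-normalized, and it only computes the hash; the multicodec prefix and any reverse-lookup index are not shown:

```python
MASK = (1 << 64) - 1


def _rol(v: int, n: int) -> int:
    return ((v << n) | (v >> (64 - n))) & MASK if n else v


def _rc_bit(t: int) -> int:
    # LFSR from FIPS 202 (Algorithm 5) that generates the iota round constants.
    r = 1
    for _ in range(t % 255):
        r <<= 1
        if r & 0x100:
            r ^= 0x171
    return r & 1


ROUND_CONSTANTS = [
    sum(1 << ((1 << j) - 1) for j in range(7) if _rc_bit(j + 7 * i)) for i in range(24)
]

# Rho rotation offsets, generated by walking the lanes as described in FIPS 202.
ROTATIONS = [[0] * 5 for _ in range(5)]
_x, _y = 1, 0
for _t in range(24):
    ROTATIONS[_x][_y] = ((_t + 1) * (_t + 2) // 2) % 64
    _x, _y = _y, (2 * _x + 3 * _y) % 5


def _keccak_f(a: list) -> list:
    """Keccak-f[1600] on 25 lanes indexed x + 5*y."""
    for rc in ROUND_CONSTANTS:
        c = [a[x] ^ a[x + 5] ^ a[x + 10] ^ a[x + 15] ^ a[x + 20] for x in range(5)]  # theta
        d = [c[(x - 1) % 5] ^ _rol(c[(x + 1) % 5], 1) for x in range(5)]
        a = [a[i] ^ d[i % 5] for i in range(25)]
        b = [0] * 25
        for x in range(5):  # rho + pi
            for y in range(5):
                b[y + 5 * ((2 * x + 3 * y) % 5)] = _rol(a[x + 5 * y], ROTATIONS[x][y])
        a = [b[i] ^ (~b[(i % 5 + 1) % 5 + 5 * (i // 5)] & b[(i % 5 + 2) % 5 + 5 * (i // 5)])
             for i in range(25)]  # chi
        a[0] ^= rc  # iota
    return a


def keccak256(data: bytes) -> bytes:
    rate = 136  # bytes absorbed per permutation for a 256-bit output
    padded = bytearray(data) + b"\x01"  # original Keccak padding, not SHA3's 0x06
    padded += b"\x00" * (-len(padded) % rate)
    padded[-1] |= 0x80
    state = [0] * 25
    for off in range(0, len(padded), rate):
        for i in range(rate // 8):
            state[i] ^= int.from_bytes(padded[off + 8 * i: off + 8 * i + 8], "little")
        state = _keccak_f(state)
    return b"".join(lane.to_bytes(8, "little") for lane in state[:4])


def namehash(name: str) -> bytes:
    """EIP-137 namehash; assumes `name` is already normalized."""
    node = bytes(32)
    if name:
        for label in reversed(name.split(".")):
            node = keccak256(node + keccak256(label.encode("utf-8")))
    return node


print(namehash("eth").hex())
```

Because namehash is one-way, a reverse lookup from an ipld:// identifier back to domain.eth would still need some index of known names.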

Even something like text("eth.balance.raffy.svgpfp.eth", "avatar") → data:...<svg>0.1 eth</svg> would be cool (although there’s no specification for liveness, and many social applications that show avatars have caching mechanisms).
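A dynamic avatar record like that could be produced by an off-chain resolver that renders an SVG and returns it as a data: URI. A minimal sketch; the label (e.g. a balance) is passed in as a plain string because fetching it, and how the wildcard name encodes the query, are out of scope here, and the SVG layout is arbitrary:

```python
import base64
from xml.sax.saxutils import escape


def svg_avatar_data_uri(label: str, size: int = 256) -> str:
    """Render a single-line SVG avatar and wrap it in a base64 data: URI."""
    svg = (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" '
        f'viewBox="0 0 {size} {size}">'
        '<rect width="100%" height="100%" fill="#5298ff"/>'
        f'<text x="50%" y="50%" text-anchor="middle" dominant-baseline="middle" '
        f'font-family="sans-serif" font-size="{size // 8}" fill="#ffffff">{escape(label)}</text>'
        "</svg>"
    )
    return "data:image/svg+xml;base64," + base64.b64encode(svg.encode("utf-8")).decode("ascii")


print(svg_avatar_data_uri("0.1 eth")[:60])
```

Since apps that display avatars often cache them, a value rendered this way may still show up stale, which is the liveness caveat above.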

The “showcase” text record is one example; we’re also working with @ethlimo.eth to add “headers” text records for gateways to read. These are app/service-specific text records paired with a * wildcard subdomain service. For example, a buy.domain.eth subdomain could have a contenthash and “buy” text records storing all the required UI, contract details, and data/signatures/$price needed to sell that domain. Or it could be a more app-specific feature, like an ensvision.domain.eth contenthash with “ensvision” text records storing the user’s buy/sell records and all metadata off-chain.
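The wildcard pattern described for buy.domain.eth can be sketched as: split the queried name into a service label and its parent, then read the text record named after that service. The in-memory records store, the record-key convention, and the JSON fields in the "buy" record are hypothetical placeholders, not the actual schema:

```python
import json
from typing import Dict, Optional, Tuple

# Hypothetical off-chain store: parent name -> service-specific text records (JSON strings).
records: Dict[str, Dict[str, str]] = {
    "domain.eth": {
        "buy": json.dumps({"price_eth": "0.5", "note": "hypothetical listing data"}),
    }
}


def split_service(name: str) -> Tuple[str, str]:
    """'buy.domain.eth' -> ('buy', 'domain.eth')."""
    service, _, parent = name.partition(".")
    return service, parent


def service_record(name: str) -> Optional[dict]:
    """Look up the text record named after the wildcard label, if the parent has one."""
    service, parent = split_service(name)
    raw = records.get(parent, {}).get(service)
    return json.loads(raw) if raw is not None else None


print(service_record("buy.domain.eth"))
print(service_record("ensvision.domain.eth"))
```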

We’ve implemented a few more non-ENS record types in .well-known directory format, so an ENS domain can be used as a Bitcoin Lightning address and a Nostr NIP-05 ID. Bluesky is all IPLD, and we know IPFS’s limits without big clusters/server providers and big funds. After waiting years for proper pubsub/WebRTC in browser ipfs-js clients, we’re now using Nostr’s public/private relays to propagate IPNS records faster between our backup republishing services, which run IPFS nodes locally. Current global propagation time is ~10 to 15 minutes for a good client and over 1 hour with bad/crowded nodes and gateways; our next big target is ~60 seconds.
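For reference, the two .well-known conventions mentioned resolve roughly like this. NIP-05 looks up name@domain at https://<domain>/.well-known/nostr.json?name=<name> and expects a "names" map from the name to a hex public key. A Lightning address (LUD-16) user@domain maps to https://<domain>/.well-known/lnurlp/<user>. A minimal sketch of the URL construction and the NIP-05 check; which host serves an ENS name's .well-known files (alice.eth.limo below) is an assumption, and no network request is made:

```python
import json
from typing import Tuple
from urllib.parse import quote, urlencode


def split_identifier(identifier: str) -> Tuple[str, str]:
    """'name@domain' -> ('name', 'domain')."""
    local, sep, domain = identifier.rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"expected local@domain, got {identifier!r}")
    return local, domain


def nip05_url(identifier: str) -> str:
    name, domain = split_identifier(identifier)
    return f"https://{domain}/.well-known/nostr.json?" + urlencode({"name": name})


def lightning_address_url(address: str) -> str:
    user, domain = split_identifier(address)
    return f"https://{domain}/.well-known/lnurlp/{quote(user)}"


def nip05_matches(nostr_json: str, identifier: str, pubkey_hex: str) -> bool:
    """True if a fetched nostr.json maps the identifier's name to the expected pubkey."""
    name, _ = split_identifier(identifier)
    names = json.loads(nostr_json).get("names", {})
    return bool(pubkey_hex) and names.get(name, "").lower() == pubkey_hex.lower()


print(nip05_url("_@alice.eth.limo"))
print(lightning_address_url("alice@alice.eth.limo"))
```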