Brainstorming:

I agree resolution times matter; however, I think the only way we can accelerate crosschain/offchain names is by caching proofs, which is no easy task.

Urg (Unruggable Gateways) proofs have two parts:

- (1) the rollup proof (depends only on the commitment)

- (2) the request proof (state proofs for the request)

The rollup proof (1) is relatively easy to cache since it’s low frequency (hourly).

However, most of these proofs are only verifiable against the active rollup contract. The corresponding eth_call requests could be converted to eth_getProof plus additional logic, which would make them resilient to contract upgrades and circular-buffer reuse. So it’s 100% possible to construct a timeless rollup proof, as long as it’s accompanied by a block inclusion proof.
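To make the circular-buffer problem concrete, here is a toy model (hypothetical, not any real rollup contract) in which commitment `i` lives in slot `i % size`. A live read, analogous to an eth_call against the active contract, silently returns a newer commitment once the buffer wraps, while a read pinned to the state at an earlier commit, analogous to a storage proof at a past block, stays stable. `RingBufferRollup`, `liveRead`, and `readAt` are illustrative names.

```typescript
// Toy model (hypothetical): the contract keeps only the last `size`
// commitments, so commitment index i lives in slot i % size.
class RingBufferRollup {
  private slots: (string | undefined)[];
  private count = 0;
  // Copy of `slots` after each commit, standing in for "contract state at the
  // L1 block that included this commit" (what a storage proof pins down).
  private history: (string | undefined)[][] = [];

  constructor(private readonly size: number) {
    this.slots = new Array<string | undefined>(size).fill(undefined);
  }

  commit(commitment: string): number {
    const index = this.count++;
    this.slots[index % this.size] = commitment; // overwrites commit (index - size)
    this.history.push([...this.slots]);
    return index;
  }

  // Analogous to eth_call against the active contract: reads *current* state.
  liveRead(index: number): string | undefined {
    return this.slots[index % this.size];
  }

  // Analogous to eth_getProof at a past block: reads state as of `atCommit`.
  readAt(index: number, atCommit: number): string | undefined {
    return this.history[atCommit]?.[index % this.size];
  }
}

const rollup = new RingBufferRollup(4);
const idx = rollup.commit("0xaaa");
for (let i = 0; i < 4; i++) rollup.commit(`0xnew${i}`);
console.log(rollup.liveRead(idx)); // "0xnew3": the slot was reused
console.log(rollup.readAt(idx, 0)); // "0xaaa": the historical view is stable
```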

EIP-2935 storage proofs can be chained together, but that doesn’t help much: one window covers 8191 blocks × 12 s/block ÷ 86400 s/day ≈ 1.14 days, so proving inclusion of a block from a year ago requires chaining roughly 321 proofs, which is very costly. I tweeted a while ago that Ethereum needs some kind of mechanism to enable this (i.e., a tower of history contracts).
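The window arithmetic above can be checked with a quick estimate. This sketch assumes 12 s per block with no missed slots and treats each hop as proving a block hash at most `HISTORY_SERVE_WINDOW` blocks further back; it ignores off-by-one details at the window edges.

```typescript
// EIP-2935 serves the hashes of the most recent HISTORY_SERVE_WINDOW blocks.
const HISTORY_SERVE_WINDOW = 8191; // blocks
const SECONDS_PER_BLOCK = 12;      // assumption: no missed slots
const SECONDS_PER_DAY = 86_400;

// Time span covered by one window, in days.
function windowDays(): number {
  return (HISTORY_SERVE_WINDOW * SECONDS_PER_BLOCK) / SECONDS_PER_DAY;
}

// Rough number of chained proofs to reach a block `ageBlocks` in the past:
// each hop can jump back at most one window.
function hopsNeeded(ageBlocks: number): number {
  return Math.ceil(ageBlocks / HISTORY_SERVE_WINDOW);
}

const oneYearBlocks = (365 * SECONDS_PER_DAY) / SECONDS_PER_BLOCK; // 2,628,000
console.log(windowDays().toFixed(3)); // "1.138"
console.log(hopsNeeded(oneYearBlocks)); // 321
```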

The request proof (2) is much harder to cache without a specialized Merkle database. An indexer could maintain such a cache at a large storage cost, but these proofs most likely need to be computed in real time.

Lastly, the indexer would have to prove that this cached proof is equivalent to the latest value. This requires the most recent version of (1) plus some partial proof that the state hasn’t changed (e.g., the account’s storageRoot is the same). If there’s no efficient way to verify this, the cached proof has no benefit: if you have to prove the most recent value to show that the old value is unchanged, you might as well just prove the recent value.
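One way to picture the storageRoot check: storage proofs are only meaningful relative to an account’s storageRoot, so a cache keyed by (account, storageRoot) can be reused whenever a fresh account proof against the latest rollup-proven state root reports the same root. The sketch below assumes exactly that; `CachedRequestProof`, `ProofCache`, and their fields are hypothetical and not part of Urg.

```typescript
// Hypothetical cache entry: storage proofs are valid relative to storageRoot.
interface CachedRequestProof {
  account: string;
  storageRoot: string;
  storageProofs: Map<string, string[]>; // slot -> MPT proof nodes
}

class ProofCache {
  private entries = new Map<string, CachedRequestProof>();

  private key(account: string, storageRoot: string): string {
    return `${account.toLowerCase()}:${storageRoot.toLowerCase()}`;
  }

  put(entry: CachedRequestProof): void {
    this.entries.set(this.key(entry.account, entry.storageRoot), entry);
  }

  // `latestStorageRoot` comes from a fresh account proof against the latest
  // state root. Same root => cached storage proofs still verify; otherwise
  // (or if any slot is missing) the caller must re-prove the slots.
  lookup(account: string, latestStorageRoot: string, slots: string[]): Map<string, string[]> | undefined {
    const entry = this.entries.get(this.key(account, latestStorageRoot));
    if (!entry) return undefined;
    const out = new Map<string, string[]>();
    for (const slot of slots) {
      const proof = entry.storageProofs.get(slot);
      if (!proof) return undefined; // partial hit treated as a miss for simplicity
      out.set(slot, proof);
    }
    return out;
  }
}
```

The catch the paragraph describes still applies: the fresh account proof is itself a node round trip, so the saving is only the storage-slot portion of the proof.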

I think, given all that:

- the rollup proof can always be prepared, cached, and available

- the rollup proof should be relative to the “latest” view of the rollup (finalized, or whatever security level we decide is necessary, e.g., 6 hours unfinalized for optimistic rollups)

- the request proof is computed dynamically (main source of latency)

- various state proofs can be cached

- duplicate calls can be cached

This is exactly the feature set that Urg supports when --prefetch is enabled.
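Of the caching layers listed above, duplicate-call caching is the simplest to illustrate. A minimal sketch, assuming each request can be reduced to a string key (for example target + calldata + block tag): concurrent identical requests share one in-flight promise, settled results are reused until a TTL expires, and failures are evicted so the next caller retries. `CallCache` is hypothetical and is not how Urg implements --prefetch.

```typescript
// Promise cache: identical keys share one in-flight computation.
class CallCache<T> {
  private entries = new Map<string, { value: Promise<T>; expires: number }>();

  constructor(
    private readonly ttlMs: number,
    private readonly now: () => number = Date.now, // injectable clock for testing
  ) {}

  get(key: string, compute: () => Promise<T>): Promise<T> {
    const hit = this.entries.get(key);
    if (hit && hit.expires > this.now()) return hit.value;
    const value = compute();
    this.entries.set(key, { value, expires: this.now() + this.ttlMs });
    // Don't cache failures: drop the entry (if still ours) so callers retry.
    value.catch(() => {
      if (this.entries.get(key)?.value === value) this.entries.delete(key);
    });
    return value;
  }
}
```

Caching the promise rather than the resolved value is what collapses simultaneous duplicates into a single upstream call.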

For L2 reverse primary names, the typical request, reading name() for address A, most likely already has a cached rollup proof and a cached account proof for the registry contract. The only cache miss is the proof for the specific name(), which is either 1 proof (if the name is less than 32 bytes) or 3+ proofs. So the typical latency for a gateway proof is one eth_getProof (with multiple slots) from a node plus gateway latency.
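The single eth_getProof mentioned above is a standard EIP-1186 JSON-RPC call that takes an address, a list of 32-byte storage keys, and a block tag, and returns the account proof plus one storage proof per key. A sketch of building that request body; the address and slot values are placeholders, deriving the actual name() slots from the registry’s storage layout is out of scope, and in practice the block tag would be the L2 block the cached rollup proof commits to rather than "latest".

```typescript
// JSON-RPC 2.0 body for eth_getProof: params = [address, storageKeys, blockTag].
interface GetProofRequest {
  jsonrpc: "2.0";
  id: number;
  method: "eth_getProof";
  params: [string, string[], string];
}

// Normalize a slot given as hex (e.g. "0x1") to a 0x-prefixed 32-byte key.
function toStorageKey(slot: string): string {
  const hex = slot.toLowerCase().replace(/^0x/, "");
  if (!/^[0-9a-f]{1,64}$/.test(hex)) throw new Error(`invalid slot: ${slot}`);
  return "0x" + hex.padStart(64, "0");
}

// One request covering every slot the lookup needs.
function buildGetProof(address: string, slots: string[], blockTag: string, id = 1): GetProofRequest {
  return { jsonrpc: "2.0", id, method: "eth_getProof", params: [address, slots.map(toStorageKey), blockTag] };
}

// Placeholder address and slots.
const body = JSON.stringify(buildGetProof("0x0000000000000000000000000000000000000001", ["0x0", "0x1"], "latest"));
console.log(body);
```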

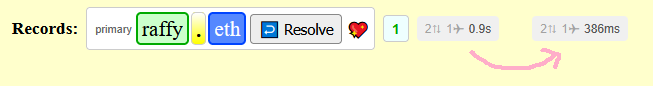

Here is a reverse lookup for 0x5105 on Base (Sepolia); if you execute it multiple times, it will have different latencies:

- rpc w/ OffchainLookup (assuming a cloud provider): 150ms

- gateway call: 100ms + external calls:

  - no rollup cache: 1-3sec (depends on gateway configuration and rollup complexity)

  - rollup cache, no request cache: < 1sec (sequential rpcs)

  - rollup cache, partial request cache: < 250ms (one eth_getProof)

  - duplicate call: 0ms

- rpc w/ callback: 150ms

Putting these together:

- Cold: 150ms + (100ms + 1-3sec) + 150ms = 1.4-3.4sec

- Warm: 150ms + (100ms + 250ms) + 150ms = ~650ms (< 1sec)

- Hot: 150ms + (100ms + 0ms) + 150ms = ~400ms
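The cold/warm/hot totals are simple sums, so they are easy to recompute when any component changes. This sketch encodes the estimates from the list above (not measurements) as [min, max] ranges; the warm case uses 0 to 250ms for the single eth_getProof.

```typescript
const RPC_MS = 150;     // eth_call that reverts with OffchainLookup; same for the callback
const GATEWAY_MS = 100; // gateway round trip, excluding its own upstream calls

type CacheState = "cold" | "warm" | "hot";

// [min, max] ms the gateway spends on external calls in each state.
const EXTERNAL_MS: Record<CacheState, [number, number]> = {
  cold: [1000, 3000], // no rollup cache
  warm: [0, 250],     // rollup cache + partial request cache: one eth_getProof
  hot: [0, 0],        // duplicate call served from cache
};

// End to end: rpc + (gateway + external calls) + rpc callback.
function totalLatency(state: CacheState): [number, number] {
  const [lo, hi] = EXTERNAL_MS[state];
  const fixed = RPC_MS + GATEWAY_MS + RPC_MS;
  return [fixed + lo, fixed + hi];
}

for (const s of ["cold", "warm", "hot"] as const) {
  const [lo, hi] = totalLatency(s);
  console.log(`${s}: ${lo}-${hi} ms`); // cold: 1400-3400, warm: 400-650, hot: 400-400
}
```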

Once widely supported, ENSIP-21 should eliminate a network hop in the UR (UniversalResolver).

And if we can figure out feature detection for resolve(multicall), latency for multiple records should improve, since it goes from 2N RPC calls + N gateway calls to 2 RPC calls + 1 gateway call, independent of N.
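The 2N + N versus 2 + 1 comparison can be sketched as a round-trip count. The latency helper below assumes the requests run sequentially with a hot gateway cache and reuses the illustrative 150ms RPC / 100ms gateway figures; a client that parallelizes per-record lookups would see less than this worst case.

```typescript
interface RoundTrips { rpc: number; gateway: number }

// Without resolve(multicall): each record costs 2 RPCs (OffchainLookup call
// + callback) and 1 gateway call. With it: one lookup covers every record.
function roundTrips(records: number, multicall: boolean): RoundTrips {
  if (records <= 0) return { rpc: 0, gateway: 0 };
  return multicall ? { rpc: 2, gateway: 1 } : { rpc: 2 * records, gateway: records };
}

// Sequential, hot-cache estimate (assumed figures, in ms).
function sequentialLatencyMs(t: RoundTrips, rpcMs = 150, gatewayMs = 100): number {
  return t.rpc * rpcMs + t.gateway * gatewayMs;
}

console.log(sequentialLatencyMs(roundTrips(5, false))); // 2000
console.log(sequentialLatencyMs(roundTrips(5, true)));  // 400
```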

I think there’s definitely an opportunity for a client to display a cached result from an HTTP service (an indexer) as fast as possible, and then follow up with a UniversalResolver call for verification.
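A minimal sketch of that display-then-verify flow, assuming names are plain strings: both requests start together, the indexer’s answer is shown as soon as it arrives, and the verified answer replaces it, with a flag when the two disagree. `fetchCached` and `verifyOnchain` are hypothetical stand-ins for an HTTP indexer call and a UniversalResolver call, respectively.

```typescript
type Source = "cache" | "verified";

interface Update { value: string | null; source: Source; mismatch: boolean }

async function resolveOptimistic(
  fetchCached: () => Promise<string | null>,
  verifyOnchain: () => Promise<string | null>,
  onUpdate: (u: Update) => void,
): Promise<string | null> {
  // Start verification immediately; attach a no-op handler so an early
  // rejection isn't reported as unhandled (the await below still throws).
  const verifiedP = verifyOnchain();
  verifiedP.catch(() => {});
  let cached: string | null = null;
  try {
    cached = await fetchCached();
    onUpdate({ value: cached, source: "cache", mismatch: false });
  } catch {
    // Indexer unavailable: show only the verified result.
  }
  const verified = await verifiedP;
  onUpdate({ value: verified, source: "verified", mismatch: cached !== null && cached !== verified });
  return verified;
}
```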