Hello ENS & ETH Developers

I attach the fourth draft incorporating comments from Nick, Makoto and others so far on Draft-3. This draft is mostly a restructuring of the previous version that:

- removes the metadata() interface (from Draft-3) and passes metadata to the client in the revert itself, and

- includes the methods to interpret the metadata within this EIP instead of a separate ENSIP.

GitHub: Draft-4

EIP-5559: Off-Chain Data Write Protocol

Cross-Chain Write Deferral Protocol incorporating secure write deferrals to centralised databases and decentralised & mutable storages

Abstract

The following proposal is an update to EIP-5559: Off-Chain Write Deferral Protocol, targeting a wider set of storage types and introducing security measures to consider for secure off-chain write deferral and retrieval. While EIP-5559 is limited to deferring write operations to L2 EVM chains and centralised databases, methods in this document enable secure write deferral to generic decentralised storages - mutable or immutable - such as IPFS, Arweave, Swarm etc. This draft alongside EIP-3668 and EIP-5559 is a significant step toward a complete and secure infrastructure for off-chain data retrieval and write deferral.

Motivation

EIP-3668, or ‘CCIP-Read’ in short, has been key to retrieving off-chain data for a variety of contracts on Ethereum blockchain, ranging from price feeds for DeFi contracts, to more recently records for ENS users. The latter case is more interesting since it dedicatedly uses off-chain storage to bypass the usually high gas fees associated with on-chain storage; this aspect has a plethora of use cases well beyond ENS records and a potential for significant impact on universal affordability and accessibility of Ethereum.

Off-chain data retrieval through EIP-3668 is a relatively simple task since it assumes that all relevant data originating from off-chain storages is translated by CCIP-Read-compliant HTTP gateways; this includes L2 chains, centralised databases or decentralised storages. On the flip side, each service leveraging CCIP-Read has so far had to handle two main tasks externally:

- writing this data securely to these storage types on their own, and

- incorporating reasonable security measures in their CCIP-Read compatible contracts for verifying this data before performing on-chain read or write operations.

Writing to a variety of centralised and decentralised storages is a broader objective compared to CCIP-Read largely due to two reasons:

- Each storage provider typically has its own architecture that the write operation must comply with, e.g. they may require additional credentials and configuration to be able to write data to them, and

- Each storage must incorporate some form of security measures during write operations so that off-chain data’s integrity can be verified by CCIP-Read contracts during the data retrieval stage.

EIP-5559 was the first step toward such a tolerant ‘CCIP-Write’ protocol which outlined how write deferrals could be made to L2 chains and centralised databases. The cases of L2 and database are similar; deferral to an L2 involves routing the eth_call to L2, while deferral to a database can be made by extracting eth_sign from eth_call and posting the resulting signature along with the data for later verification. In both cases, no pre-flight information needs to be processed by the client and the arguments of eth_call and eth_sign as specified in EIP-5559 are sufficient. This proposal extends the previous attempt by including secure write deferrals to decentralised storages, especially those which - beyond the arguments of eth_call and eth_sign - require additional pre-flight metadata from clients to successfully host users’ data on their favourite storage. This document also enables more complex and generic use-cases of databases such as those which do not store the signers’ addresses on chain as presumed in EIP-5559.

Curious Case of Decentralised Storages

Decentralised storages powered by cryptographic protocols are unique in their diversity of architectures compared to centralised databases or L2 chains, both of which have canonical architectures in place. For instance, write calls to L2 chains can be generalised through the use of chainId for any given callData; write deferral in this case is as simple as routing the eth_call to another contract on an L2 chain. There is no need to incorporate any additional security requirement(s) since the L2 chain ensures data integrity locally, while the global integrity can be proven by employing a state verifier scheme (e.g. EVM-Gateway) during CCIP-Read calls. Centralised databases have a very similar architecture where instead of invoking eth_call, the result of eth_sign needs to be posted on the database along with the callData for integrity verification by CCIP-Read.

Decentralised storages on the other hand, do not typically have EVM- or database-like environments and may have their own unique content addressing requirements. For example, IPFS, Arweave, Swarm etc all have unique content identification schemes as well as their own specific fine-tunings and/or choices of cryptographic primitives, besides supporting their own cryptographically secured namespaces. This significant and diverse deviation from EVM-like architecture results in an equally diverse set of requirements during both the write deferral operation as well as the subsequent state verifying stage.

For example, consider a scenario where the choice of storage is IPNS or ArNS. In precise terms, IPNS storage refers to immutable IPFS content wrapped in mutable IPNS namespace, which eventually serves as the reference coordinate for off-chain data. The case of ArNS is similar; ArNS is immutable Arweave content wrapped in mutable ArNS namespace. To write to IPNS or ArNS storage, the client requires more information than only the gateway URL responsible for write operations and arguments of eth_sign. More precisely, the client must at least prompt the user for their IPNS or ArNS signature which is necessary for updating the namespaced storage. The client may also require additional information from the user such as specific arguments required by IPNS or ArNS signature. One such example is the requirement of integer sequence of IPNS update which goes into the construction of signature message payload. These additional user-centric requirements are not accommodated by EIP-5559, and the resolution of these issues - among others such as batch writing - is detailed in the following attempt towards a suitable CCIP-Write specification.

Specification

Overview

The following specification revolves around the structure and description of an arbitrary off-chain storage handler tasked with the responsibility of writing to an arbitrary storage. First introduced in EIP-5559, the protocol outlined herein expands the capabilities of the StorageHandledByBob() revert to accept decentralised and namespaced storages. In addition, this draft proposes that StorageHandledByL2() and StorageHandledByOffChainDatabase() introduced in EIP-5559 be updated, and new StorageHandledByBob() reverts be allowed through new EIPs that sufficiently detail their interfaces and designs. Some foreseen examples of new storage handlers include StorageHandledByIPFS() for IPFS, StorageHandledByIPNS() for IPNS, StorageHandledByArweave() for Arweave, StorageHandledByArNS() for ArNS, StorageHandledBySwarm() for Swarm etc.

Similar to EIP-5559, a CCIP-Write deferral call to an arbitrary function setValue(bytes32 key, bytes32 value) can be described in pseudo-code as follows:

// Define revert event

error StorageHandledByBob(address sender, bytes callData, bytes metadata);

// Generic function in a contract

function setValue(

bytes32 key,

bytes32 value

) external {

// Get metadata from on-chain sources

bytes memory metadata = getMetadata(key);

// Defer write call to off-chain handler

revert StorageHandledByBob(

msg.sender,

abi.encode(key, value),

metadata

);

}

where StorageHandledByBob() must follow this structure:

// Details of revert event

error StorageHandledByBob(

address sender, // Sender of call (msg.sender)

bytes callData, // Payload to store

bytes metadata // Metadata required by off-chain clients

);
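On the client side, the revert data carries a 4-byte error selector followed by the ABI encoding of (address, bytes, bytes). As a rough sketch only, the decoder below unpacks that layout by hand; the Deferral type and the function names are hypothetical and not part of this draft, and a real client would more likely rely on an ABI library.

```typescript
// Hypothetical shape of a decoded deferral; fields mirror the revert arguments.
interface Deferral {
  selector: string; // 4-byte error selector of the StorageHandledBy...() revert
  sender: string; // address, as hex
  callData: string; // 0x-prefixed hex payload to store
  metadata: string; // 0x-prefixed hex metadata for the client
}

const WORD = 64; // one 32-byte ABI word, counted in hex characters

// Read the 32-byte word at a byte offset as a number.
// parseInt is adequate here because offsets and lengths are small.
function readUint(body: string, byteOffset: number): number {
  return parseInt(body.slice(byteOffset * 2, byteOffset * 2 + WORD), 16);
}

// Read a dynamic `bytes` value whose head slot sits at index `headIndex`.
function readBytes(body: string, headIndex: number): string {
  const offset = readUint(body, headIndex * 32); // where the tail starts
  const length = readUint(body, offset); // byte length of the value
  const start = (offset + 32) * 2;
  return "0x" + body.slice(start, start + length * 2);
}

function decodeDeferral(revertData: string): Deferral {
  const hex = revertData.startsWith("0x") ? revertData.slice(2) : revertData;
  if (hex.length < 8 + 3 * WORD) throw new Error("revert data too short");
  const body = hex.slice(8); // strip the 4-byte selector
  return {
    selector: "0x" + hex.slice(0, 8),
    sender: "0x" + body.slice(24, 64), // last 20 bytes of the first word
    callData: readBytes(body, 1),
    metadata: readBytes(body, 2),
  };
}
```

The client would then dispatch on the selector to pick the matching storage handler and interpret metadata according to that handler's documentation.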

Metadata

The metadata type captures all the relevant information that the client may require to update a user’s data on their favourite storage. For instance, metadata must contain a pointer to a user’s data on their desired storage. In the case of StorageHandledByL2() for example, metadata must contain a chain identifier such as chainId and additionally the contract address. In case of StorageHandledByDatabase(), metadata must contain the custom gateway URL serving a user’s data. In case of StorageHandledByIPNS(), metadata may contain the public key of a user’s IPNS container; the case of ArNS is similar. In addition, metadata may further contain security-driven information such as a delegated signer’s address who is tasked with signing the off-chain data; such signers and their approvals must also be contained for verification tasks to be performed by the client. It follows that each storage handler StorageHandledByBob() must define the precise construction of metadata type in their documentation. Note that the metadata function doesn’t necessarily read any or all of the aforementioned metadata from the contract; it is possible that this metadata is in fact stored off-chain, in which case metadata type may instead revert with OffchainLookup() that the client must process. Some example constructions of metadata functions which support L2, databases, IPFS, Arweave, IPNS, ArNS and Swarm[?] are given below.

L2 Handler: StorageHandledByL2()

A minimal L2 handler only requires the list of chainId values and the corresponding contract addresses, so StorageHandledByL2() as defined in EIP-5559 is sufficient. In the context of this proposal, chainId and contract must be returned by the metadata function. The deferral in this case will prompt the client to submit the transaction to the relevant L2 as returned by the metadata function. One example of an L2 handler’s metadata function is given below.

EXAMPLE

error StorageHandledByL2(address sender, bytes callData, bytes metadata);

(

address contractL2, // Contract address on L2

string chainId, // L2 ChainID

string metaEndpoint // Metadata API endpoint (optional)

) = getMetadata(node); // Arbitrary code

// contractL2 = "0x32f94e75cde5fa48b6469323742e6004d701409b"

// chainId = "21"

// metaEndpoint = "https://op.namesys.xyz" (optional)

bytes memory metadata = abi.encode(contractL2, chainId, metaEndpoint);

There may however arise a situation where a service first stores some data on L2 and then writes - asynchronously or otherwise - to another off-chain storage type. In such cases, the L2 contract should implement a second off-chain write deferral after making desired local state changes. This in principle allows creation of chained storage handlers without explicitly introducing a callback function in this proposal.

Database Handler: StorageHandledByDatabase()

A minimal database handler is similar to an L2 in the sense that:

a) it requires the gatewayUrl responsible for handling off-chain write operations (similar to chainId), and

b) it should require eth_sign output to secure the data and the client must prompt the users for these signatures (similar to eth_call).

In this case, the metadata must contain the bespoke gatewayUrl and may additionally contain the addresses of dataSigner of eth_sign. If a dataSigner is included in the metadata, then the client must make sure that the signature forwarded to the gateway is signed by that dataSigner. One example of a database handler’s metadata function is given below.

EXAMPLE

error StorageHandledByDatabase(address sender, bytes callData, bytes metadata);

(

string gatewayUrl, // Gateway URL

address dataSigner, // Ethereum signer's address

string metaEndpoint // Metadata API endpoint (optional)

) = getMetadata(node);

// gatewayUrl = "https://api.namesys.xyz"

// dataSigner = "0xc0ffee254729296a45a3885639AC7E10F9d54979"

// metaEndpoint = "https://db.namesys.xyz" (optional)

bytes memory metadata = abi.encode(gatewayUrl, dataSigner, metaEndpoint);

In the above example, the client must prompt the user for a signature, verify that it was produced by the matching dataSigner, and finally pass the resulting signature to the respective gateway URL. The message payload for the signature in this case may be formatted as per EIP-712, as detailed in EIP-5559. Some storage handlers may however choose simple string formatting as long as it is properly documented. This proposal leaves this aspect of off-chain metadata construction to storage handlers and individual ecosystems.
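A minimal sketch of the client-side check for a database handler could look like the following. The DatabaseMetadata and GatewayRequest types, the function name and the JSON body layout are all assumptions made for illustration; this draft does not fix the gateway's request format.

```typescript
// Decoded metadata of a database handler; field names follow the example above.
interface DatabaseMetadata {
  gatewayUrl: string;
  dataSigner?: string; // optional: metadata may omit the signer
  metaEndpoint?: string;
}

// Hypothetical request shape; the body layout is an assumption, not part of the draft.
interface GatewayRequest {
  url: string;
  body: string;
}

function buildGatewayRequest(
  meta: DatabaseMetadata,
  signer: string, // address that produced `signature`
  callData: string, // hex payload taken from the revert
  signature: string // hex signature over the payload
): GatewayRequest {
  // If metadata names a dataSigner, only forward signatures made by that signer.
  if (meta.dataSigner && meta.dataSigner.toLowerCase() !== signer.toLowerCase()) {
    throw new Error(`signer ${signer} does not match dataSigner ${meta.dataSigner}`);
  }
  return {
    url: meta.gatewayUrl,
    body: JSON.stringify({ signer, data: callData, signature }),
  };
}
```

Addresses are compared case-insensitively here so that checksummed and lower-case forms of the same address are treated as equal.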

Decentralised Storage Handlers

Decentralised storages are the most extreme case in the sense that they come in both immutable and mutable forms; the immutable forms locate the data through immutable content identifiers (CIDs) while the mutable forms utilise some sort of namespace which can statically reference any dynamic content. Examples of the former include raw content hosted on IPFS and Arweave while the latter forms use IPNS and ArNS namespaces respectively to reference the raw and dynamic content.

The case of immutable forms is similar to a database although these forms are not as useful in practice so far. This is due to the difficulty associated with posting the unique CID on chain each time a storage update is made. One way to bypass this difficulty is by storing the CID cheaply in an L2 contract; this method requires the client to update the data on both the decentralised storage as well as the L2 contract through two chained deferrals. CCIP-Read in this case is also expected to read from two storages to be able to fully handle a read call. Contrary to this tedious flow, namespaces can instead be used to statically fetch immutable CIDs. For example, instead of a direct reference to immutable CIDs, IPNS and ArNS public keys can be used to refer to IPFS and Arweave content respectively; this method doesn’t require dual deferrals by CCIP-Write (or CCIP-Read), and the IPNS or ArNS public key needs to be stored on chain only once. However, accessing the IPNS and ArNS content now requires the client to prompt the user for additional information via context, e.g. IPNS and ArNS signatures, in order to update the data.

Decentralised storage handlers’ metadata interface is therefore expected to return additional context which the clients must interpret and evaluate before calling the gateway with the results. This feature is not supported by EIP-5559 and services using EIP-5559 are thus incapable of storing data on decentralised namespaced & mutable storages. One example of a decentralised storage handler’s metadata function for IPNS is given below.

EXAMPLE: StorageHandledByIPNS()

error StorageHandledByIPNS(address sender, bytes callData, bytes metadata);

(

string gatewayUrl, // Gateway URL

address dataSigner, // Ethereum signer's address

bytes ipnsSigner, // Context for namespace (IPNS signer's hex-encoded CID)

string metaEndpoint // Metadata API endpoint (optional)

) = getMetadata(node);

// gatewayUrl = "https://ipns.namesys.xyz"

// dataSigner = "0xc0ffee254729296a45a3885639AC7E10F9d54979"

// ipnsSigner = "0xe50101720024080112203fd7e338b2de90159832ffcc434927da8bbfc3a000fa58ea0548aa8e08f7e10a"

// metaEndpoint = "https://gql.namesys.xyz" (optional)

bytes memory metadata = abi.encode(gatewayUrl, dataSigner, ipnsSigner, metaEndpoint);

In this example, the client must process the context according to the specifications of the StorageHandledByIPNS() identifier. For instance, the client must request the user for an IPNS signature verifiable against the signer’s CID returned in context. The client additionally needs a sequence counter representing IPNS record version which it should fetch from the metaEndpoint. The clients should then evaluate the context by feeding the sequence counter to the message payload and then obtaining the resulting IPNS signature. This signature must then be passed to the gateway among other arguments.

Interpreting Metadata

The methods described in this section have been designed with autonomy, privacy, UI/UX and accessibility for ethereum users in mind. The plethora of off-chain storages have their own diverse ecosystems such that it is not uncommon for each storage to have its own set of UI/UX requirements, such as wallets, signer extensions etc. If ethereum users were to utilise such storage providers, they would inevitably be subjected to additional wallet extensions in their browsers. This is not ideal and the methods in this section have been crafted such that users do not need to install any additional UI/UX components or extensions other than their favourite ethereum wallet.

StorageHandledByIPNS() is more complex in construction than StorageHandledByDatabase() which is a reduced version of the former. For this reason, we will start by describing how clients should implement StorageHandledByIPNS() first. Later on, we will reduce the requirements to the simpler case of StorageHandledByDatabase().

Key Generation

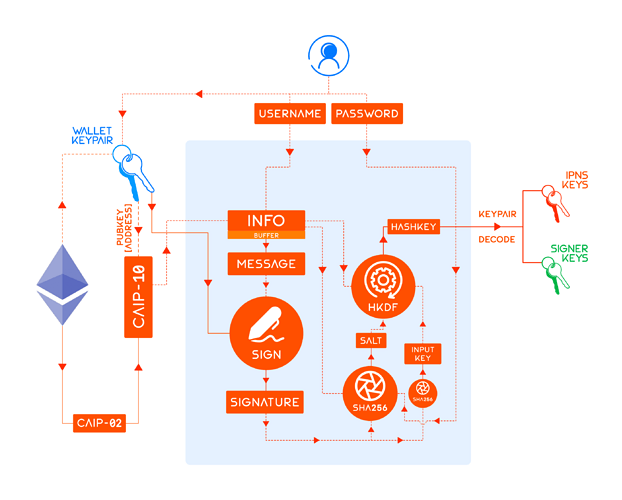

This draft proposes that both the dataSigner and ipnsSigner keypairs be generated deterministically from ethereum wallet signatures; see the figure above.

This process involving deterministic key generation can be implemented concisely in a single unified keygen() function (available in namesys.js library) as follows.

import { hkdf } from "@noble/hashes/hkdf";

import { sha256 } from "@noble/hashes/sha256";

import * as secp256k1 from "@noble/secp256k1";

import * as ed25519 from "@noble/ed25519";

/**

* @param username Key identifier

* @param caip10 CAIP identifier for the blockchain account

* @param signature Deterministic signature from X-wallet provider

* @param password Optional password

* @returns Deterministic private/public keypairs as hex strings

* Hex-encoded

* [ ed25519.priv, ed25519.pub],

* [secp256k1.priv, secp256k1.pub]

*/

export async function keygen(

username: string,

caip10: string,

signature: string,

password: string | undefined

): Promise<[[string, string], [string, string]]> {

// Reject hex signatures shorter than 64 characters (a full signature is 65 bytes)

if (signature.length < 64)

throw new Error("SIGNATURE TOO SHORT; LENGTH SHOULD BE 65 BYTES");

// Calculate input key by hashing signature bytes using sha256 algorithm

let inputKey = sha256(

secp256k1.utils.hexToBytes(

signature.toLowerCase().startsWith("0x") ? signature.slice(2) : signature

)

);

// Calculate info from CAIP-10 identifier and username

let info = `${caip10}:${username}`;

// Calculate salt for keygen by hashing concatenated info, password and hex-encoded signature using sha256 algorithm

let salt = sha256(

`${info}:${password ? password : ""}:${signature.slice(-64)}`

);

// Calculate hash key output by feeding input key, salt and info to the HMAC-based key derivation function

let hashKey = hkdf(sha256, inputKey, salt, info, 42);

// Convert hash key to a private scalar for ed25519 elliptic curve

let ed25519priv = ed25519.utils

.hashToPrivateScalar(hashKey)

.toString(16)

.padStart(64, "0"); // ed25519 Private Key

// Get public key by evaluating private scalar over ed25519 elliptic curve

let ed25519pub = secp256k1.utils.bytesToHex(

await ed25519.getPublicKey(ed25519priv)

); // ed25519 Public Key

// Convert hash key to a private key for secp256k1 elliptic curve

let secp256k1priv = secp256k1.utils.bytesToHex(

secp256k1.utils.hashToPrivateKey(hashKey)

); // secp256k1 Private Key

// Get public key by evaluating private key over secp256k1 elliptic curve

let secp256k1pub = secp256k1.utils.bytesToHex(

secp256k1.getPublicKey(secp256k1priv)

); // secp256k1 Public Key

// Return both ed25519 and secp256k1 key types for IPNS and ethereum signers respectively

return [

// Hex-encoded [[ed25519.priv, ed25519.pub], [secp256k1.priv, secp256k1.pub]]

[ed25519priv, ed25519pub],

[secp256k1priv, secp256k1pub],

];

}

This keygen() function requires four variables: caip10, username, password and signature. Their descriptions are given below.

CAIP-10

CAIP-10 identifier caip10 is auto-derived from the connected wallet’s checksummed address wallet and chainId.

// CAIP-10 identifier

const caip10 = `eip155:${chainId}:${wallet}`;

Username

username may be prompted from the user by the client or determined by the protocol. This public field allows users to switch their protocol-specific IPNS namespace in the future. For instance, protocols may set username deterministically as equal to caip10 or some protocol-specific function of node; see example below.

// Username is dependent on the storage type which can be 'WalletType' or

// 'NodeType'. See definitions at the end of this section.

// Example: node = namehash(normalise(ens)) for ENS, aka preimage(node) = ens

let username;

if (storage === 'WalletType') username = caip10;

if (storage === 'NodeType') username = preimage(node);

Password

password is a private field and it must be prompted from the user by the client; this field allows users to secure their IPNS namespace for a given username.

// IPNS secret key identifier; clients must prompt the user for this

const password = 'key1';

Deterministic Signatures

Deterministic signatures form the backbone of secure, keyless, autonomous and smooth UI when off-chain storages are in the mix. In the simplest implementation, at least two separate signatures need to be prompted from the users by the clients: SIG_IPNS & SIG_SIGNER.

a. SIG_IPNS for IPNS Keygen

SIG_IPNS is the deterministic ethereum signature responsible for IPNS key generation and for interpreting ipnsSigner metadata. Message payload for SIG_IPNS must be formatted as:

Requesting Signature To Generate IPNS Key\n\nOrigin: ${username}\nKey Type: ${keyType}\nExtradata: ${extradata}\nSigned By: ${caip10}

b. SIG_SIGNER for Signer Keygen

SIG_SIGNER is the deterministic ethereum signature responsible for universal signer key generation. In order to enable batch data writing for multiple nodes, a universal signer must be derived from the owner or manager keys of a node. This signer is tasked with interpreting dataSigner metadata. Message payload for SIG_SIGNER must be formatted as:

Requesting Signature To Generate Data Signer\n\nOrigin: ${username}\nKey Type: ${keyType}\nExtradata: ${extradata}\nSigned By: ${caip10}

In both SIG_IPNS and SIG_SIGNER signature payloads, the extradata is calculated as

// Calculating extradata in keygen signatures

bytes32 extradata = keccak256(

abi.encodePacked(

keccak256(abi.encodePacked(password)),

wallet

)

);

and keyType is currently ed25519 for SIG_IPNS as required by IPNS and secp256k1 for SIG_SIGNER since it is an ethereum signer. In the future, IPFS network plans to phase in secp256k1 key types at which point ed25519 key derivation won’t be necessary.
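Because the derived keys depend on these payloads byte for byte, clients may want to build both messages from a single template. The sketch below shows one possible way to do that; the KeygenRequest type and the function names are hypothetical, and extradata is taken as an already computed hex string rather than derived here.

```typescript
type KeyType = "ed25519" | "secp256k1";

// Inputs shared by both keygen signature payloads (hypothetical container type).
interface KeygenRequest {
  username: string; // caip10 for WalletType, preimage(node) for NodeType
  caip10: string; // e.g. eip155:<chainId>:<wallet>
  extradata: string; // hex string, computed as described above
}

// Shared template; only the purpose and key type differ between the two payloads.
function keygenMessage(
  purpose: "IPNS Key" | "Data Signer",
  keyType: KeyType,
  req: KeygenRequest
): string {
  return (
    `Requesting Signature To Generate ${purpose}\n\n` +
    `Origin: ${req.username}\n` +
    `Key Type: ${keyType}\n` +
    `Extradata: ${req.extradata}\n` +
    `Signed By: ${req.caip10}`
  );
}

// SIG_IPNS payload: ed25519, as currently required by IPNS.
function sigIpnsMessage(req: KeygenRequest): string {
  return keygenMessage("IPNS Key", "ed25519", req);
}

// SIG_SIGNER payload: secp256k1, since the result is an ethereum signer.
function sigSignerMessage(req: KeygenRequest): string {
  return keygenMessage("Data Signer", "secp256k1", req);
}
```

Keeping one template means a change in whitespace or field order cannot make the two payloads drift apart, which would otherwise silently produce different keys.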

With these deterministic formats for signature message payloads, the client must prompt the user for two eth_sign signatures. Once the user signs the messages, the keygen() function can derive the IPNS keypair and the signer keypair. The clients must additionally derive the IPNS CID and ethereum address corresponding to the IPNS and signer public keys (implemented in namesys.js library). The metadata interpretation concludes with the client ensuring that

- the derived IPNS CID must match the ipnsSigner metadata, and

- the derived signer’s address must match the dataSigner metadata.

If these conditions are not met, clients must throw an error and inform the user of failure in interpretation of the metadata. If these conditions are met, then the client has the correct private keys to update a user’s IPNS record as well as sign a user’s data for later verification by CCIP-Read. Since the derived signer can sign multiple instances of off-chain data in the background without prompting the user, it is possible to update data for multiple nodes simultaneously with this method.
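The two conditions above can be sketched as a single guard that runs after keygen(). The DerivedKeys and SignerMetadata types and the function name are assumptions made for this illustration; deriving the IPNS CID and the ethereum address from the public keys is left to the client library.

```typescript
// Values the client derived from the keygen() output (hypothetical container type).
interface DerivedKeys {
  ipnsCid: string; // hex-encoded IPNS CID derived from the ed25519 public key
  signerAddress: string; // ethereum address derived from the secp256k1 public key
}

// Values decoded from the revert's metadata.
interface SignerMetadata {
  ipnsSigner: string;
  dataSigner: string;
}

// Hex comparison that ignores case, so checksummed addresses still match.
function sameHex(a: string, b: string): boolean {
  return a.toLowerCase() === b.toLowerCase();
}

// Throws if either derived value disagrees with the on-chain metadata.
function checkDerivedKeys(derived: DerivedKeys, meta: SignerMetadata): void {
  if (!sameHex(derived.ipnsCid, meta.ipnsSigner)) {
    throw new Error("derived IPNS CID does not match ipnsSigner metadata");
  }
  if (!sameHex(derived.signerAddress, meta.dataSigner)) {
    throw new Error("derived signer address does not match dataSigner metadata");
  }
}
```

A client would call this before any write, and surface the error message to the user when interpretation fails.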

Storage Types

Storage types refer to two types of IPNS namespaces that can host a user’s data. In the first case of NodeType, each node has a unique IPNS container whose CID is stored in ipnsSigner metadata. In the second case of WalletType, a user can store the data for all nodes owned or managed by a given wallet. Naturally, the second method is highly cost effective although it compromises on security to some extent; this is due to a single IPNS signer manifesting as a single point of compromise for all off-chain data for a wallet. This feature is achieved by choosing an appropriate username in the signature message payload of SIG_IPNS depending on the desired storage type. Similar cost-effectiveness can be achieved for the dataSigner metadata as well by choosing WalletType over NodeType when deriving SIG_SIGNER.

Revert StorageHandledByDatabase()

The case of StorageHandledByDatabase() handler is a subset of the decentralised storage handler, in the sense that the clients should simply skip interpreting IPNS related metadata. This avoids having to derive SIG_IPNS and there is no concept of storage types for off-chain database handlers. Other than that, the entire process is the same as StorageHandledByIPNS().

Off-Chain Signers

It is possible to further save on gas costs by not storing the dataSigner metadata on chain. Services or users can instead post an approval for the dataSigner signed by the owner or manager of a domain along with the off-chain data. CCIP-Read can then verify this approval during resolution time and no on-chain dataSigner needs to be saved. This additional saving comes at the cost of one additional approval signature SIG_APPROVAL that the clients must prompt from the user. This signature must have the following message payload format:

Requesting Signature To Approve Data Signer\n\nOrigin: ${ens}\nApproved Signer: ${dataSigner}\nApproved By: ${caip10}

where dataSigner must be checksummed.
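As a small illustration, the SIG_APPROVAL payload could be assembled as below. The function name is hypothetical, and the address check is only a shape check: validating the EIP-55 checksum needs keccak256, which this sketch deliberately leaves to the client's ethereum library.

```typescript
// Build the SIG_APPROVAL message payload for a given name, signer and approver.
function approvalMessage(ens: string, dataSigner: string, caip10: string): string {
  // Shape check only; the checksum casing itself is NOT verified in this sketch.
  if (!/^0x[0-9a-fA-F]{40}$/.test(dataSigner)) {
    throw new Error("dataSigner must be a 20-byte hex address");
  }
  return (
    `Requesting Signature To Approve Data Signer\n\n` +
    `Origin: ${ens}\n` +
    `Approved Signer: ${dataSigner}\n` +
    `Approved By: ${caip10}`
  );
}
```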

Data Signatures: SIG_DATA

Signature(s) SIG_DATA accompanying the off-chain data must implement the following format in their message payloads:

Requesting Signature To Update Off-Chain Data\n\nOrigin: ${ens}\nData Type: ${dataType}\nExtradata: ${extradata}\nSigned By: ${caip10}

where extradata must be calculated as follows,

// Extradata in record signatures

bytes memory dataBytes = abi.encodePacked([type, dataValue]);

string memory extradata = utils.bytesToHexString(

abi.encodePacked(

keccak256(

dataBytes

)

)

);

where,

- the dataType parameters are protocol-specific; they are defined in ENSIP-6 and ENSIP-9 for ENS, e.g. text/avatar, address/60 etc., and

- the type is simply the Solidity data type of the record value.

CCIP-Read Compatible Payload

The final data: payload in the off-chain record file could then follow this format,

let encodedRecord = ethers.utils.defaultAbiCoder.encode([type], [dataValue]);

let encodeWithSelector = interface.encodeFunctionData("signedRecord", [

dataSigner, // type 'address'

SIG_DATA, // type 'bytes'

SIG_APPROVAL, // type 'bytes'

encodedRecord // dynamic type

]);

which the CCIP-Read-enabled resolvers should first correctly decode, and then verify signer approval and record signatures, before resolving the record value.

New Revert Events

- Each new storage handler must submit their StorageHandledByBob() identifier through an ERC track proposal referencing the current draft and EIP-5559.

- Each StorageHandledByBob() provider must be supported with detailed documentation of its structure and the necessary metadata that its implementers must return.

- Each StorageHandledByBob() proposal must define the precise formatting of any message payloads that require signatures and complete descriptions of custom cryptographic techniques implemented for additional security, accessibility or privacy.

Implementation featuring ENS

ENS off-chain resolvers capable of reading from and writing to decentralised storages are perhaps the most complex use-case for CCIP-Read and CCIP-Write. One example of such a (minimal) resolver is given below:

interface iResolver {

// Defined in EIP-5559

error StorageHandledByIPNS(

address sender,

bytes callData,

bytes metadata

);

// Defined in EIP-137

function setAddr(bytes32 node, address addr) external;

}

// Defined in EIP-5559

string public gatewayUrl = "https://api.namesys.xyz";

string public metaEndpoint = "https://gql.namesys.xyz";

/**

* Sets the ethereum address associated with an ENS node

* [!] May only be called by the owner or manager of that node in ENS registry

* @param node Namehash of ENS domain to update

* @param addr Ethereum address to set

*/

function setAddr(

bytes32 node,

address addr

) external authorised(node) {

// Get ethereum signer & IPNS CID stored on-chain with arbitrary logic/code

// Both may be unique to each name, or each owner or manager address

(address dataSigner, bytes memory ipnsSigner) = getMetadata(node);

// Construct metadata required by off-chain clients. Clients must refer to ENSIP-Y for directions to interpret this metadata

bytes memory metadata = abi.encode(

gatewayUrl, // Gateway URL tasked with writing to IPNS

dataSigner, // Ethereum signer's address

ipnsSigner, // IPNS signer's hex-encoded CID as context for namespace

metaEndpoint // GraphQL metadata endpoint (required by ENSIP-16)

);

// Defer to IPNS storage

revert StorageHandledByIPNS(

msg.sender,

abi.encode(node, addr),

metadata

);

}