Well, for a start it would make certain records that are valid in DNS (mostly service records as you observe, but it’s not limited to that) impossible to import into ENS via the DNSSEC integration.

Well, the advantage of allowing new Unicode/ASCII symbols is probably not obvious at first glance. As we saw with emoji, a community formed around them much later, which was totally unexpected, and emoji names are now one of the main features of the entire ecosystem.

The same can be expected of “_”, “$”, and other ASCII symbols. The “$” also makes sense because currencies are one of the main applications of Ethereum/crypto.

Subdomains are an especially good fit: “Ξ.kraken.eth”, “€.kraken.eth”, and “$.kraken.eth” would make a lot of sense as names for stablecoin or exchange wallets. But that is only one of many possible applications.

Similarly, besides the technical reasons Nick mentioned, domain names with underscores give the community more creative freedom in choosing a Web 3.0 identity. In my opinion they fit in nicely, as rank.eth pointed out as well.

The point is that we can’t know in advance what creative developments will come up, but we should at least give the community the opportunity to use these symbols without being overly restrictive. This is what makes ENS so different and successful.

I think Raffy made a good point in his other thread. We won’t be able to stop the number of domains that clash with Web 2.0 DNS from growing anyway. People will surely come up with the idea of simply registering punycode literals (why wouldn’t they?), and that alone will make the list of incompatible domains bigger, on top of the fact that different browsers already use different standards for insufficiently supported emoji/Unicode. However, since these domains are a very small share of the total, this is not a problem in itself.
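
To make the punycode point concrete: a label such as `xn--bcher-kva` is plain ASCII, so nothing stops it from being registered as-is, yet DNS clients may display it as a different Unicode label. The sketch below uses Python's built-in `punycode` codec to show what a literal `xn--` label decodes to. The helper name `punycode_literal_target` is hypothetical, not part of any ENS library, and it only performs the punycode step, not full IDNA ToUnicode validation.

```python
def punycode_literal_target(label: str):
    """Return the Unicode label a DNS client could display for an ``xn--`` literal.

    Hypothetical helper for illustration: returns None for labels that are not
    punycode literals and raises ValueError for malformed ones.
    """
    if not label.lower().startswith("xn--"):
        return None
    try:
        # Strip the ACE prefix and run the stdlib RFC 3492 punycode decoder.
        return label[4:].encode("ascii").decode("punycode")
    except UnicodeError as exc:
        raise ValueError(f"malformed punycode literal: {label!r}") from exc
```

For example, `punycode_literal_target("xn--bcher-kva")` gives `"bücher"`, so an ENS name with that literal label and a DNS name showing “bücher” would refer to the same DNS label.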

The solution, as Raffy has already mentioned, is to tell the user at the time of registration whether the domain is compatible with traditional DNS. In my opinion, the solution is not to abide by old, worn-out rules forever and ever, rules whose importance will keep diminishing in a Web 3.0 context anyway.
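
A registration UI could run a check along these lines before purchase. This is a minimal sketch, assuming the name is already normalized: it only recognizes ASCII letter-digit-hyphen (LDH) labels (1 to 63 characters, no leading or trailing hyphen, at most 253 characters overall). Unicode labels would additionally need IDNA 2008 processing, which Python's standard library does not provide, so they are reported as `"needs-idna-check"` rather than judged. The function name and return values are illustrative assumptions.

```python
import re

# One LDH label (RFC 1035 / RFC 1123): 1-63 chars of a-z, 0-9, "-", starting and
# ending with a letter or digit. Input is assumed to be normalized (lowercase).
_LDH_LABEL = re.compile(r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?")


def dns_compatibility(name: str) -> str:
    """Classify a normalized ENS name as 'compatible', 'incompatible', or 'needs-idna-check'."""
    if len(name) > 253:
        return "incompatible"
    labels = name.split(".")
    for label in labels:
        if label.isascii() and not _LDH_LABEL.fullmatch(label):
            return "incompatible"  # e.g. underscores, "+", "$", or a leading hyphen
    if all(label.isascii() for label in labels):
        return "compatible"
    # Unicode labels may still be valid IDNs; deciding that needs an IDNA 2008 library.
    return "needs-idna-check"
```

Run over the examples given later in this thread, it reports `_fate.eth`, `c++.eth`, and `-40.eth` as incompatible, which is exactly the kind of warning that could be shown at registration instead of blocking the name.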

Hey guys,

Great discussion and insights in here.

Here are my 2 cents from a user perspective, also drawing on talks with other ENS users.

To me, this is a great and essential point.

I hear the argument for a web2/web3-compatible path with DNS and so on, but how many people are actually using those features right now? For example, how many have linked their web2 domains, emails, etc. to their ENS web3 counterparts?

It seems to me that the main utility of ENS domains is, and might remain, having a short and/or intelligible domain that serves both as a pseudonym and as an easy way to share your wallet address instead of a random string of ETH address characters.

If the data clearly show that this is the main utility, development and decisions should be made accordingly.

This largely fixes the compatibility issue.

People who are just looking for a domain with 100% web3 usage won’t care about this.

And if they want something web2/web3 friendly they will be warned before buying.

I only see a win-win situation here, but I might be blind to specific technical issues.

Would allowing symbols that are not DNS-compliant also open the window to 1-2 character ENS names?

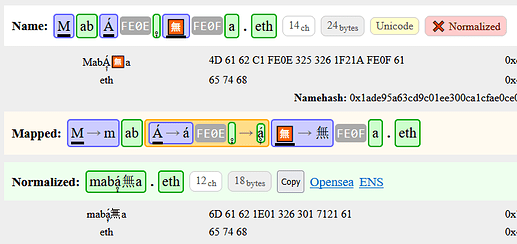

I updated the @adraffy/ens-normalize.js repo to match my ENSIP. It passes all validation tests and is identical to the reference implementation when run head-to-head on all registered names (although the format of this report needs improving), and it is 20 KB.

I also updated the resolver demo to the latest version.

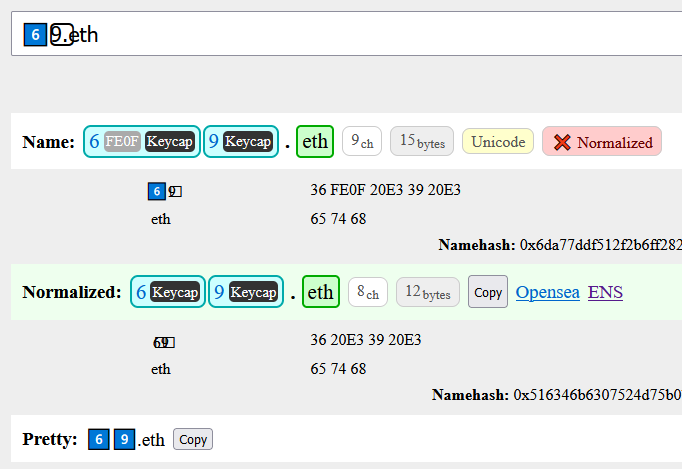

New feature: Mapped — shows where transformations are happening:

(mappings: purple, NFC: orange)
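
A rough way to reproduce this kind of annotation: the sketch below groups a name into clusters (a base character plus any following combining marks), applies a stand-in mapping step (`str.lower`, not the real ENS mapping table) and then NFC, and tags each cluster by which step changed it. The cluster rule is a simplification that ignores cases such as conjoining Hangul jamo, and `annotate` is a hypothetical name, not the resolver demo's code.

```python
import unicodedata


def annotate(name: str) -> list[tuple[str, str]]:
    """Tag each cluster of ``name`` as 'valid', 'mapped', or 'nfc' (toy sketch)."""
    clusters: list[str] = []
    for ch in name:
        # Attach combining marks (nonzero canonical combining class) to the previous cluster.
        if clusters and unicodedata.combining(ch):
            clusters[-1] += ch
        else:
            clusters.append(ch)

    tagged = []
    for cluster in clusters:
        mapped = cluster.lower()  # stand-in for the real mapping step
        composed = unicodedata.normalize("NFC", mapped)
        if composed != mapped:
            kind = "nfc"     # orange in the demo's color scheme
        elif mapped != cluster:
            kind = "mapped"  # purple in the demo's color scheme
        else:
            kind = "valid"
        tagged.append((composed, kind))
    return tagged
```

For `"Cafe\u0301"`, the `C` is tagged as mapped and the `e` plus combining acute accent is tagged as NFC (it composes to `é`).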

New feature: Pretty — emoji are replaced with their fully-qualified forms without changing the normalization

That is, `norm(beautify(name)) == norm(name)`.
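
The invariant `norm(beautify(name)) == norm(name)` is easy to property-test. In this sketch both functions are toy stand-ins (assumptions, not the ens-normalize.js API): `toy_norm` lowercases, applies NFC, and drops the emoji presentation selector U+FE0F, while `toy_beautify` re-inserts U+FE0F after two emoji that are normally written with it. A real test would swap in the library's functions and run over all registered names.

```python
import unicodedata

FE0F = "\ufe0f"  # VARIATION SELECTOR-16 (emoji presentation)
# Hypothetical, tiny table of emoji whose fully-qualified form ends in FE0F.
NEEDS_FE0F = {"\u2764", "\u263a"}  # heavy black heart, white smiling face


def toy_norm(name: str) -> str:
    """Toy normalizer: lowercase, NFC, and ignore FE0F."""
    return unicodedata.normalize("NFC", name.lower()).replace(FE0F, "")


def toy_beautify(name: str) -> str:
    """Toy beautifier: add FE0F after listed emoji that lack it."""
    out = []
    for i, ch in enumerate(name):
        out.append(ch)
        following = name[i + 1] if i + 1 < len(name) else ""
        if ch in NEEDS_FE0F and following != FE0F:
            out.append(FE0F)
    return "".join(out)


# Property check: beautifying never changes what the name normalizes to.
for sample in ["\u2764.eth", "\u263a\ufe0f.eth", "Nick.eth", "cafe\u0301.eth"]:
    assert toy_norm(toy_beautify(sample)) == toy_norm(sample), sample
```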

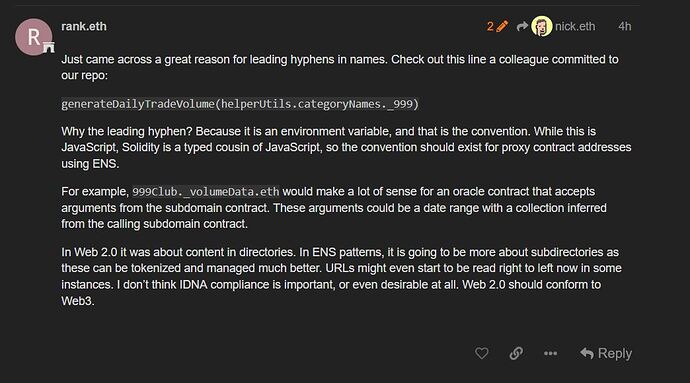

Just came across a great reason for leading underscores* in names. Check out this line a colleague committed to our repo:

```javascript
generateDailyTradeVolume(helperUtils.categoryNames._999)
```

Why the leading underscore*? Because it is an environment variable, and that is the convention. While this is JavaScript, Solidity is a typed cousin of JavaScript, so the convention should exist for proxy contract addresses using ENS.

For example, 999Club._volumeData.eth would make a lot of sense for an oracle contract that accepts arguments from the subdomain contract. These arguments could be a date range with a collection inferred from the calling subdomain contract.

In Web 2.0 it was about content in directories. In ENS patterns, it is going to be more about subdirectories as these can be tokenized and managed much better. URLs might even start to be read right to left now in some instances. I don’t think IDNA compliance is important, or even desirable at all. Web 2.0 should conform to Web3.

Not sure if it’s just not showing, even though hyphens usually show, but I see no hyphen here.

I see a leading underscore, but no hyphen.

Doesn’t this just prove my point yet again that hyphens should be mapped to underscores, since they’re confusable???

I’m assuming a lot of changes are being made to what is possible with this normalization update.

Is the ultimate objective to allow anything that shows as normalized here: ENS Resolver?

I am curious as to how many changes are going to be made.

Allowing the underscore, and allowing the hyphen at the beginning or end of a domain, are two of many changes that seem to work (the tool says the name is normalized) when entered into the tool made by Raffy.

I know the “$” is planned to be allowed as well; since “£”, “€”, and “¥” are allowed, that makes sense.

QUESTION: Will these currency characters be allowed with letters as well, not only with numbers as they are right now? This seems logical to me, since emoji can be combined with all characters (numbers, letters, hyphens…). They also go through as normalized in Raffy’s tool.

If there are any other compelling additions/changes being made, please let me know as I am curious.

Also, if the main point of all this is to expand the scope of possibilities, then I see it as beneficial in the long term for ENS users. The only thing we should be concerned with is whether any major (specifically technical) issues arise from adding new symbols/characters.

I edited. I meant underscores.

The mistake came from thinking in multiple languages, not from confusing the symbols. In my mind, I would only make that kind of mistake in language.

I will take your idea as true, though, and argue the points against it. A leading hyphen is the minus sign for numbers, and since JavaScript coerces types, you could give it meaning in a string too if you wanted to be mean.

The problem I would have with mapping symbols is that Unicode alternates like capital lowercase letters and letters with diacritical marks are allowed, and people are being brutally scammed without even a peep from the biggest ENS holders about fixing this problem. I agree with Nick.eth about just saying screw it and allowing it all.

_fate.eth, c++.eth, -40.eth, and united_states are all invalid. There is no logical reason for that besides compliance with Web 2.0.

If I had to choose between hyphens and underscores for spaces, I would choose underscores all day long. Hyphens imply a conjunction, while underscores are a long-standing file-naming practice for putting spaces in file names.

My argument is that whether Web 2.0 allows a character in the leading or trailing position is not relevant. ENS allows every language (finally!) and makes life more inclusive. Why allow one but not the other?

As a programmer, I would at least appreciate the underscore. If names that are not compliant with Web 2.0 cause some issues, fine, that’s a limitation of Web 2.0. Web 3 projects shouldn’t be molding themselves to Web 2.0 practices born out of long-expired workarounds. I was online before Unicode even existed, which is when all of these naming conventions were solidified. Although Unicode technically existed when the WWW started, the WWW was always just ASCII. Nice system, but we have a better one now!

> I know the “$” is planned to be allowed as well, with the “£” “€” and “¥” allowed, it makes sense.

$ in particular is also a programming convention in many languages. If underscores are allowed, $ should be as well, and for many reasons I don’t see why any currency symbol should be disallowed.

I know what you meant; it was just a mistake.

And from someone who, I’m guessing, knows how to program.

But it shows how easy it is to do…

Point proven…

In my view, ENS should be made for the masses, not just for people who sit in front of a computer working all day, every day.

You had already edited it twice before my screenshot but hadn’t caught that mistake.

Do you believe your ENSIP is ready to be advanced for standardisation?

Do you have an updated report of names that are invalidated or change their normalisation as a result of the new standard? I can send you a new list of registered names if need be.

Transposition is terrifyingly easy, I absolutely agree 100%!

That’s why I think popularizing ENS = Ethereum address as an absolute is dangerous. I’ve sent things to the wrong address from unchecksummed OCR before (meaning the address was all lowercase). It’s not good enough just to have an ENS name; you need the underlying checksummed Ethereum address always displayed as a cross-check, both to verify that the dapp developer didn’t fat-finger something and to check that you didn’t make a mistake yourself.
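
The checksum cross-check referred to here is EIP-55: the lowercase hex address is hashed with Keccak-256, and each hex letter is uppercased when the matching nibble of the hash is 8 or higher. Python's standard library has no Keccak-256 (`hashlib.sha3_256` is the NIST SHA-3 variant, which pads differently and gives different digests), so this self-contained sketch includes a small, slow, pure-Python Keccak-256 whose round constants and rotation offsets are derived from the spec's LFSR and offset formulas. It is an illustration only; real wallet code should use a vetted library.

```python
MASK64 = (1 << 64) - 1


def _rol(value: int, shift: int) -> int:
    """Rotate a 64-bit lane left by ``shift`` bits."""
    return ((value << shift) | (value >> (64 - shift))) & MASK64


def _rc_bit(t: int) -> int:
    """Output bit of the Keccak round-constant LFSR (x^8 + x^6 + x^5 + x^4 + 1)."""
    r = 1
    for _ in range(t % 255):
        r <<= 1
        if r & 0x100:
            r ^= 0x171
    return r & 1


# 24 round constants: bit (2^j - 1) of RC[i] is rc(j + 7i).
ROUND_CONSTANTS = [
    sum(_rc_bit(j + 7 * i) << ((1 << j) - 1) for j in range(7)) for i in range(24)
]

# Rho offsets: walk (x, y) -> (y, 2x + 3y) and use triangular numbers mod 64.
ROTATIONS = [[0] * 5 for _ in range(5)]
_x, _y = 1, 0
for _t in range(24):
    ROTATIONS[_x][_y] = ((_t + 1) * (_t + 2) // 2) % 64
    _x, _y = _y, (2 * _x + 3 * _y) % 5


def _keccak_f(a: list[int]) -> list[int]:
    """Keccak-f[1600] permutation on 25 lanes indexed x + 5*y."""
    for rc in ROUND_CONSTANTS:
        c = [a[x] ^ a[x + 5] ^ a[x + 10] ^ a[x + 15] ^ a[x + 20] for x in range(5)]  # theta
        d = [c[(x - 1) % 5] ^ _rol(c[(x + 1) % 5], 1) for x in range(5)]
        a = [a[i] ^ d[i % 5] for i in range(25)]
        b = [0] * 25  # rho + pi
        for x in range(5):
            for y in range(5):
                b[y + 5 * ((2 * x + 3 * y) % 5)] = _rol(a[x + 5 * y], ROTATIONS[x][y])
        a = [b[i] ^ (~b[(i + 1) % 5 + 5 * (i // 5)] & b[(i + 2) % 5 + 5 * (i // 5)])
             for i in range(25)]  # chi
        a[0] ^= rc  # iota
    return a


def keccak256(data: bytes) -> bytes:
    """Original Keccak-256 (0x01 padding), as used by Ethereum; not NIST SHA3-256."""
    rate = 136  # bytes absorbed per block for a 256-bit output
    padded = bytearray(data) + b"\x01"
    padded += b"\x00" * (-len(padded) % rate)
    padded[-1] |= 0x80
    state = [0] * 25
    for offset in range(0, len(padded), rate):
        for i in range(rate // 8):
            lane = padded[offset + 8 * i: offset + 8 * i + 8]
            state[i] ^= int.from_bytes(lane, "little")
        state = _keccak_f(state)
    return b"".join(lane.to_bytes(8, "little") for lane in state[:4])


def to_checksum_address(address: str) -> str:
    """EIP-55: uppercase each hex letter whose matching hash nibble is >= 8."""
    hex_addr = address.lower().removeprefix("0x")
    if len(hex_addr) != 40 or any(ch not in "0123456789abcdef" for ch in hex_addr):
        raise ValueError(f"not a 20-byte hex address: {address!r}")
    digest = keccak256(hex_addr.encode("ascii")).hex()
    return "0x" + "".join(
        ch.upper() if ch.isalpha() and int(digest[i], 16) >= 8 else ch
        for i, ch in enumerate(hex_addr)
    )


def has_valid_checksum(address: str) -> bool:
    """True only if ``address`` is exactly the EIP-55 mixed-case form."""
    return to_checksum_address(address) == address
```

An all-lowercase address (as from the OCR case above) carries no checksum information, so a wallet can only display `to_checksum_address(...)` next to the ENS name for comparison; a mixed-case address that fails `has_valid_checksum` almost certainly contains a typo.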

Whatever the result of normalization now or in the future, we need better filters and alerts for counterfeit names. With Arabic numerals it’s a real problem in marketplaces, because the default alert is meaningless: most digits are written exactly the same in Urdu, Kurdish, and Farsi as in Arabic. This is leading to scams. Marketplaces should probably output established language-group flags.
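
One way to produce such flags for numeric names is to report which Unicode digit block each character comes from. Arabic-Indic digits (U+0660 to U+0669) and Extended Arabic-Indic digits (U+06F0 to U+06F9, used for Persian and Urdu) are separate code points even where the glyphs look identical, so a marketplace could show the block instead of a generic alert. The flag names and the `digit_flags` helper are made up for illustration.

```python
import unicodedata

# Hypothetical flag names, keyed by the prefix of each digit's Unicode character name.
# Order matters: the "EXTENDED" prefix must be tested before the plain Arabic-Indic one.
DIGIT_GROUPS = [
    ("EXTENDED ARABIC-INDIC DIGIT", "extended-arabic-indic"),  # U+06F0..U+06F9
    ("ARABIC-INDIC DIGIT", "arabic-indic"),                    # U+0660..U+0669
    ("DIGIT", "ascii"),                                        # U+0030..U+0039
]


def digit_flags(label: str) -> set[str]:
    """Return one flag per digit block used in ``label``; more than one means mixed digits."""
    flags = set()
    for ch in label:
        if not ch.isdecimal():
            continue
        char_name = unicodedata.name(ch, "")
        for prefix, flag in DIGIT_GROUPS:
            if char_name.startswith(prefix):
                flags.add(flag)
                break
        else:
            flags.add("other-digits")
    return flags
```

For example, `digit_flags("\u0661\u0662\u0663")` yields `{"arabic-indic"}`, while the lookalike `"\u06f1\u06f2\u06f3"` yields `{"extended-arabic-indic"}`, so two visually identical listings would carry different flags.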

Edit: And in programming, every single developer will be very suspicious if their code compiles the first time. It’s scary when that happens. Then you ask yourself what you did wrong to make it work first try. It’s much more reassuring when the compiler yells at you for a missing semicolon or extra bracket a couple times.

The changes I’m proposing (which ens-normalize.js, norm-ref-impl.js, and the resolver follow) are outlined in my ENSIP draft. They’re actually pretty minimal compared to what I was originally proposing (invalidating ~2% of names).

Report: ens_normalize_1.5.0_vs_eth-ens-namehash_2.0.15 | Directory of Tests
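
A head-to-head comparison like this report can be sketched as a function that runs two normalizers over the same list of registered names and buckets each name by outcome. Both normalizers below are purely illustrative toy placeholders (assumptions, not ens_normalize or eth-ens-namehash); plugging in the real implementations would produce the list of invalidated and changed names.

```python
import unicodedata
from typing import Callable, Dict, Iterable, List, Optional

Normalizer = Callable[[str], str]  # returns the normalized name or raises ValueError


def _run(normalize: Normalizer, name: str) -> Optional[str]:
    try:
        return normalize(name)
    except ValueError:
        return None


def compare_normalizers(old: Normalizer, new: Normalizer,
                        names: Iterable[str]) -> Dict[str, List[str]]:
    """Bucket each name by how its normalization differs between ``old`` and ``new``."""
    report: Dict[str, List[str]] = {
        "unchanged": [], "changed": [], "newly_invalid": [],
        "newly_valid": [], "both_invalid": [],
    }
    for name in names:
        before, after = _run(old, name), _run(new, name)
        if before is None and after is None:
            bucket = "both_invalid"
        elif after is None:
            bucket = "newly_invalid"
        elif before is None:
            bucket = "newly_valid"
        elif before == after:
            bucket = "unchanged"
        else:
            bucket = "changed"
        report[bucket].append(name)
    return report


# Toy placeholders only: "old" rejects underscores, "new" rejects spaces and applies NFC.
def toy_old(name: str) -> str:
    if "_" in name:
        raise ValueError("underscore")
    return name.lower()


def toy_new(name: str) -> str:
    if " " in name:
        raise ValueError("space")
    return unicodedata.normalize("NFC", name.lower())
```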

I have an updated list but I might be missing some.

Pretty much. Give me this evening to review the language in the ENSIP.

The latest ens_tokenize has the NFC Quick Check logic that only runs NFC on the minimal parts of the string. That was the only piece of the Solidity contract that was missing for a 100% match.
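
The idea of running NFC only where it can matter can be sketched in Python. Real quick-check implementations use the per-character Unicode `NFC_Quick_Check` property, which Python's `unicodedata` only exposes for whole strings (`unicodedata.is_normalized`, Python 3.8+). As a conservative stand-in, this sketch treats every ASCII character as a safe split point (ASCII characters are starters with `NFC_Quick_Check=Yes`, so nothing composes or reorders across them) and normalizes only the segments that contain non-ASCII text. It is not the ens_tokenize logic itself.

```python
import unicodedata


def nfc_minimal(s: str) -> str:
    """NFC-normalize ``s``, touching only segments that contain non-ASCII text."""
    if unicodedata.is_normalized("NFC", s):
        return s  # fast path: nothing to do
    out = []
    start = 0
    for i in range(1, len(s) + 1):
        # Split just before each ASCII character, or at the end of the string.
        if i == len(s) or s[i].isascii():
            segment = s[start:i]
            out.append(segment if segment.isascii() else unicodedata.normalize("NFC", segment))
            start = i
    return "".join(out)
```

The result should always equal `unicodedata.normalize("NFC", s)`; the saving is that long ASCII runs, which dominate registered names, are never passed through the normalizer.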

Only the $ sign is to be normalized? What about the other currencies: £, ¥, €, ₿?

They were already valid in IDNA 2003 / EIP-137. They aren’t valid in IDNA 2008, which is why there is confusion.
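
Python's built-in `idna` codec implements IDNA 2003 (RFC 3490, with nameprep and punycode), so it can illustrate the first half of this claim: “£”, “¥”, and “€” pass ToASCII and round-trip through ToUnicode. The standard library has no IDNA 2008 implementation, so the contrasting rejection is not shown here. The helper name `idna2003_ace` is hypothetical.

```python
def idna2003_ace(label: str) -> bytes:
    """Encode ``label`` with Python's IDNA 2003 codec and check that it round-trips."""
    ace = label.encode("idna")       # ToASCII: nameprep + punycode with the "xn--" prefix
    if ace.decode("idna") != label:  # ToUnicode must give the same label back
        raise ValueError(f"{label!r} does not round-trip under IDNA 2003")
    return ace


for symbol in ["£", "¥", "€"]:
    print(symbol, idna2003_ace(symbol))
```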

Validation will happen after normalization. The underlying idea is simple: each label gets checked to see if it’s safe for use. Potentially, there is also a full-name check.

It’s hard to state exactly which names are safe, but it’s easy to chip away at the problem. I think everyone agrees DNS labels are safe, so we can write a validator that returns true for names composed of DNS labels. From my calculations above, that’s 95% of names.

Each validator is simple to write because it doesn’t need to process emoji, it only deals with normalized characters, and most validators are single-script. There also is an efficient way to determine which validators could apply.
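
A validator layer like the one described could look roughly like this. Each validator is just a named character set over already-normalized text, and an inverted index from character to validators narrows the candidates one character at a time. That index is one possible way (an assumption here, not necessarily the efficient method meant above) to find which validators could apply. The charsets are illustrative: `"dns"` ignores hyphen-position rules, `"greek"` is a hypothetical single-script example, and emoji are deliberately left out.

```python
import string

# Illustrative single-script validators over normalized text (emoji handled elsewhere).
VALIDATORS: dict[str, frozenset[str]] = {
    "dns": frozenset(string.ascii_lowercase + string.digits + "-"),  # ignores hyphen rules
    "greek": frozenset("αβγδεζηθικλμνξοπρςστυφχψω" + string.digits),  # hypothetical
}

# Inverted index: character -> names of the validators whose charset contains it.
BY_CHAR: dict[str, set[str]] = {}
for _name, _charset in VALIDATORS.items():
    for _ch in _charset:
        BY_CHAR.setdefault(_ch, set()).add(_name)


def applicable_validators(label: str) -> set[str]:
    """Narrow the candidate validators one character at a time."""
    candidates = set(VALIDATORS)
    for ch in label:
        candidates &= BY_CHAR.get(ch, set())
        if not candidates:
            break  # no validator covers this label
    return candidates


def is_safe_name(normalized_name: str) -> bool:
    """A name is 'safe' here if every label is covered by at least one validator."""
    return all(applicable_validators(label) for label in normalized_name.split("."))
```

Because each validator is single-script, a mixed-script label such as `aβ` ends up with no candidates and is reported as unsafe, while `αβγ.eth` passes with `greek` for the first label and `dns` for the second.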

There is a question of what to do with this information.

- For contracts or headless scenarios, you either just normalize (throw on invalid, allow unsafe) or normalize with the ENS recommendation (throw on invalid or unsafe)

- For user input, like text fields, unsafe names should show a warning, but the name should still work if the user acknowledges. This requires a change to the ENS recommendation and various applications.

- A power-user feature might be to limit the accepted charsets: maybe you want to be stricter than the ENS recommendation and warn on everything that isn’t composed of DNS labels. This could occur at the user-input level (“I want MetaMask to warn on non-DNS names”) or at the application level (`*.cb.id` might just be strict DNS).
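
The usage modes in the list above can be expressed as a small policy switch around two functions, `normalize` and `is_safe`. Both are toy placeholders here (hypothetical, not the ens-normalize.js API): `toy_normalize` lowercases, applies NFC, and rejects whitespace as invalid; `toy_is_safe` stands in for the ENS recommendation by accepting letters and digits of any script plus `-` and `_`. Passing the stricter `strict_dns` check is how the power-user option would slot in.

```python
import re
import unicodedata
from enum import Enum
from typing import Callable, Optional, Tuple


class Mode(Enum):
    NORMALIZE = "normalize"      # headless: throw on invalid, allow unsafe
    RECOMMENDED = "recommended"  # headless: throw on invalid or unsafe
    USER_INPUT = "user-input"    # UI: warn on unsafe, user may acknowledge


class UnsafeNameError(ValueError):
    """Raised in RECOMMENDED mode for names that normalize but are not deemed safe."""


_LDH = re.compile(r"[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?")


def toy_normalize(name: str) -> str:
    if any(ch.isspace() for ch in name):
        raise ValueError(f"invalid name: {name!r}")
    return unicodedata.normalize("NFC", name.lower())


def toy_is_safe(name: str) -> bool:
    # Placeholder for the ENS recommendation: letters/digits (any script), "-" and "_".
    return all(label and all(ch.isalnum() or ch in "-_" for ch in label)
               for label in name.split("."))


def strict_dns(name: str) -> bool:
    # Power-user option: only ASCII letter-digit-hyphen labels.
    return all(_LDH.fullmatch(label) for label in name.split("."))


def check_name(name: str, mode: Mode,
               is_safe: Callable[[str], bool] = toy_is_safe) -> Tuple[str, Optional[str]]:
    """Return (normalized name, warning or None); raise on invalid, or unsafe in RECOMMENDED."""
    norm = toy_normalize(name)  # invalid names raise ValueError in every mode
    if mode is Mode.NORMALIZE or is_safe(norm):
        return norm, None
    if mode is Mode.RECOMMENDED:
        raise UnsafeNameError(f"unsafe name: {norm!r}")
    # USER_INPUT: keep the name usable but surface a warning the user must acknowledge.
    return norm, f"{norm!r} does not pass the selected safety check"
```

With the default check, `check_name("_fate.eth", Mode.USER_INPUT)` returns no warning, while the same call with `is_safe=strict_dns` returns a warning, mirroring the “I want MetaMask to warn on non-DNS names” case.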

I see that there are domains with capital letters registered in this list, one being a duplicate of another: $sbux and $SBUX. Just to let you know.

That is not an error; it just means someone has manually registered the name with uppercase characters directly against the smart contracts. The name with uppercase characters will simply normalize to the correct lowercase name in all client wallets/sites. And that is true today as well, even before any of this updated normalization code goes into effect.

OK, thank you. So does that mean two wallets will own the same domain?

No, it means that if you use “NAME.eth” in Metamask, it’s actually going to send to “name.eth”.

So you can register “NAME.eth” manually if you want, but it’ll be essentially useless to you.

ok i see. thx for the info