I’ve got a few remaining questions regarding normalization.

-

The UTS-46 spec distinguishes between “registration” and “lookup”, and stricter rules apply during registration. Since ENS is decentralized, aren’t these the same? I feel like the goal here should be to have a single procedure that takes user input and normalizes it identically across all applications and platforms.

-

One example of this difference is ContextO (Rules #3-#9). Should ENS name normalization follow ContextO? For reference, ContextJ should definitely be used since it prevents ZWJ abuse. However, the spec says ContextO is for registration, not lookup.
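To make the ContextJ/ContextO split concrete, here is a partial sketch of a few of the RFC 5892 Appendix A rules. It only covers rules that need nothing beyond Python's `unicodedata`: ZWJ (A.2, ContextJ), MIDDLE DOT (A.3, ContextO) and the Arabic-Indic digit rules (A.8/A.9, ContextO). ZWNJ (A.1), the Greek keraia, the Hebrew geresh/gershayim and the Katakana middle dot need Joining_Type or Script data and are deliberately left out. `check_context_subset` is a hypothetical helper name, not part of any ENS library.

```python
import unicodedata

ZWJ = "\u200d"
MIDDLE_DOT = "\u00b7"
ARABIC_INDIC = {chr(c) for c in range(0x0660, 0x066A)}       # U+0660..U+0669
EXT_ARABIC_INDIC = {chr(c) for c in range(0x06F0, 0x06FA)}   # U+06F0..U+06F9


def check_context_subset(label: str) -> bool:
    """Hypothetical helper: checks only RFC 5892 rules A.2, A.3, A.8 and A.9."""
    for i, ch in enumerate(label):
        if ch == ZWJ:
            # A.2 (ContextJ): ZWJ must directly follow a virama,
            # i.e. a character with canonical combining class 9.
            if i == 0 or unicodedata.combining(label[i - 1]) != 9:
                return False
        elif ch == MIDDLE_DOT:
            # A.3 (ContextO): only allowed between two 'l', as in Catalan "l·l".
            if not (0 < i < len(label) - 1
                    and label[i - 1] == "l" and label[i + 1] == "l"):
                return False
    # A.8 / A.9 (ContextO): Arabic-Indic and Extended Arabic-Indic
    # digits may not be mixed in the same label.
    chars = set(label)
    if chars & ARABIC_INDIC and chars & EXT_ARABIC_INDIC:
        return False
    return True
```

For example, `col·lecció` passes while `a·b` fails, and a ZWJ is only accepted after a virama such as U+094D (Devanagari). Under UTS-46, only the ZWJ/ZWNJ part corresponds to CheckJoiners; the other checks would be an extra ENS-specific choice.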

-

Does anyone have enough experience with bidirectional characters to form an opinion on the check_bidi flag in UTS-46? Essentially, when enabled, a label (the characters between the stops in a domain name) cannot mix left-to-right and right-to-left letters; digits and some neutral characters are still allowed, subject to the Bidi Rule in RFC 5893. I have a few bidi examples in my resolver demo for reference. Mixed-direction text is user-hostile IMO, but maybe there’s a use case for mixing something like English and Hebrew inside a single label?
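For reference on what check_bidi actually enforces, here is a minimal sketch of the six conditions of the RFC 5893 Bidi Rule applied to a single label, using the Bidi_Class values from Python's `unicodedata.bidirectional`. It assumes the label is already normalized, and it applies the rule unconditionally; UTS-46 only applies it when the whole domain name is a "Bidi domain name" (contains at least one RTL label), which this sketch does not check. `passes_bidi_rule` is a hypothetical name.

```python
import unicodedata

# Bidi classes permitted by RFC 5893, Section 2, rules 2 (RTL) and 5 (LTR)
RTL_ALLOWED = {"R", "AL", "AN", "EN", "ES", "CS", "ET", "ON", "BN", "NSM"}
LTR_ALLOWED = {"L", "EN", "ES", "CS", "ET", "ON", "BN", "NSM"}


def passes_bidi_rule(label: str) -> bool:
    """Check one label against the six conditions of the RFC 5893 Bidi Rule."""
    if not label:
        return False
    classes = [unicodedata.bidirectional(ch) for ch in label]

    # Rule 1: the first character must be L, R or AL; it fixes the direction.
    first = classes[0]
    if first not in ("L", "R", "AL"):
        return False

    # Rules 3 and 6 look at the last character, ignoring trailing NSM marks.
    # classes[0] is never NSM here, so this loop always stops at end >= 1.
    end = len(classes)
    while classes[end - 1] == "NSM":
        end -= 1
    last = classes[end - 1]

    if first in ("R", "AL"):  # RTL label
        if any(c not in RTL_ALLOWED for c in classes):  # rule 2
            return False
        if last not in ("R", "AL", "EN", "AN"):  # rule 3
            return False
        if "EN" in classes and "AN" in classes:  # rule 4
            return False
        return True

    # LTR label
    if any(c not in LTR_ALLOWED for c in classes):  # rule 5
        return False
    return last in ("L", "EN")  # rule 6
```

With this, `abc` and `שלום` pass, `שלום1` passes (European digits are allowed at the end of an RTL label), but `abcשלום` fails because an LTR label may not contain R characters, which is exactly the English/Hebrew mixing case above.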

UTS-46 operates on unescaped Unicode sequences (see UTS #46: Unicode IDNA Compatibility Processing). Should the normalization process recognize and expand HTML, Unicode, and URI escapes? I suggest that every input field that accepts an ENS name should automatically translate these escapes.
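To show why the number of decoding passes matters, here is a hypothetical decoder sketch; `decode_one_layer` and `decode_until_stable` are made-up names, not part of any ENS library. It handles only URI percent-escapes, HTML character references and JavaScript-style `\uXXXX` escapes. Because escapes can themselves be escaped, decoding once and decoding until nothing changes give different names for the same input.

```python
import html
import re
import urllib.parse

# JavaScript-style escapes such as "\u0061" (assumed format, 4 hex digits)
_JS_UNICODE = re.compile(r"\\u([0-9A-Fa-f]{4})")


def decode_one_layer(s: str) -> str:
    """Undo one pass of each escape type, in a fixed order."""
    s = urllib.parse.unquote(s)   # "%61" -> "a"
    s = html.unescape(s)          # "&#97;" -> "a", "&amp;" -> "&"
    return _JS_UNICODE.sub(lambda m: chr(int(m.group(1), 16)), s)


def decode_until_stable(s: str, limit: int = 10) -> str:
    """Keep decoding until the string stops changing (or `limit` passes)."""
    for _ in range(limit):
        nxt = decode_one_layer(s)
        if nxt == s:
            break
        s = nxt
    return s
```

For example, `%2561` decodes to `%61` after one pass but to `a` when decoded until stable, and `%26%2397%3B` already becomes `a` in a single pass because the percent-decoded text is then fed to the HTML decoder. Any ENS-wide rule would have to pin down both the number of passes and the order of decoders.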

Edit 3: I now understand that escapes can be dangerous because they can be nested: you can escape the escape characters themselves. UTS-46 addresses this problem poorly, because punycode can decode to more punycode if CheckHyphens is false.

-

My library already passes 100% of IDNATestV2, yet this test appears to be insufficient: I’ve just discovered that IDNA2008 disallows more characters than I was aware of (see the NV8 and XV8 annotations in UTS-46’s IdnaMappingTable.txt). Should we follow IDNA2008? From my understanding, UTS-46 with transitional=false should follow IDNA2008.

Edit: it appears NV8 can’t be used exactly as the spec suggests, because it contains emoji. XV8 only contains one character, 0x19DA = ᧚.
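If anyone wants to inspect these sets themselves, here is a small sketch that collects the NV8 and XV8 code points from the mapping table. It assumes the semicolon-separated layout of IdnaMappingTable.txt (code points; status; optional mapping; optional IDNA2008 status), with `#` starting a comment. The `SAMPLE` lines are written in that layout for illustration only; `parse_idna2008_flags` is a hypothetical helper.

```python
from typing import Dict, Iterable, Set


def parse_idna2008_flags(lines: Iterable[str]) -> Dict[str, Set[int]]:
    """Collect code points flagged NV8 or XV8 in the 4th field of each row."""
    flags: Dict[str, Set[int]] = {"NV8": set(), "XV8": set()}
    for raw in lines:
        data = raw.split("#", 1)[0].strip()  # drop trailing comment
        if not data:
            continue
        fields = [f.strip() for f in data.split(";")]
        # fields: [code point or range, status, mapping, IDNA2008 status]
        if len(fields) < 4 or fields[3] not in flags:
            continue
        lo, _, hi = fields[0].partition("..")
        start = int(lo, 16)
        end = int(hi, 16) if hi else start
        flags[fields[3]].update(range(start, end + 1))
    return flags


# Illustrative rows in the IdnaMappingTable.txt layout (not the full file).
SAMPLE = [
    "# comment line",
    "0061..007A    ; valid                                  # 1.1  LATIN SMALL LETTER A..LATIN SMALL LETTER Z",
    "02C2..02C5    ; valid                  ;      ; NV8    # 1.1  MODIFIER LETTER LEFT ARROWHEAD..MODIFIER LETTER DOWN ARROWHEAD",
    "19DA          ; valid                  ;      ; XV8    # 5.2  NEW TAI LUE THAM DIGIT ONE",
]

# Against the real file:
# with open("IdnaMappingTable.txt", encoding="utf-8") as f:
#     flags = parse_idna2008_flags(f)
```

Separating emoji from the rest of NV8 would need emoji data on top of this (for example, the emoji property files), which the sketch does not attempt.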

Edit 2: NV8 contains 8759 characters. Except for emoji, I think most of these should be disallowed. Example: ˃˃˃.eth

-

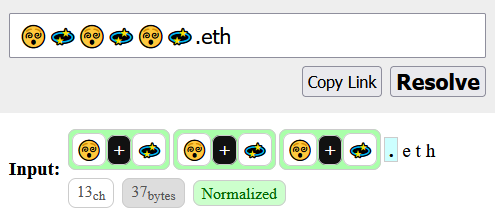

Lastly, please take a look at this post in the emoji thread about whether the presence or absence of ZWJ inside emoji sequences should be treated as distinct names.
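A tiny illustration of the ZWJ question: the family emoji written as one ZWJ sequence and the same three emoji written without ZWJ are different strings, but a hypothetical policy that ignores ZWJ (`zwj_insensitive_key` below is a made-up name, not a proposal from the thread) would map both to the same name.

```python
ZWJ = "\u200d"


def zwj_insensitive_key(name: str) -> str:
    """Hypothetical policy: ignore ZWJ when deciding if two names are the same."""
    return name.replace(ZWJ, "")


# Man, woman, girl joined with ZWJ (usually rendered as one family glyph)
family_zwj = "\U0001F468\u200d\U0001F469\u200d\U0001F467"
# The same three emoji without ZWJ (usually rendered as three glyphs)
family_plain = "\U0001F468\U0001F469\U0001F467"
```

Under such a policy the two spellings would collide as a single ENS name; keeping ZWJ significant keeps them distinct but lets visually different renderings exist side by side.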

Any thoughts would be greatly appreciated.

A few prior threads for reference: